Workshop Guide

Training Environment

Throughout this guide, AVS stands for Azure VMware Solution.

Azure Portal Credentials

Replace “#” with your group number.

Connect to https://portal.azure.com with the following credentials:

Environment Details

Jumpbox Details

Your first task will be creating a Jumpbox in your respective Jumpbox Resource group.

NOTE: In addition to the instructions below, you can watch this video which will explain the same steps and get you ready for deploying the Jumpbox.

The following table will help you identify the Resource Group, Virtual Network (vNET) and Subnet in which you will be deploying your Jumpbox VM.

Replace the word ‘Name’ with Partner name, and ‘X’ with your Group Number

| Group | Jumpbox Resource Group | Virtual Network/Subnet |
|---|---|---|
| X | GPSUS-NameX-Jumpbox | GPSUS-NameX-VNet/JumpBox |

Example - Partner name is ABC and Group number is 2:

| Group | Jumpbox Resource Group | Virtual Network/Subnet |
|---|---|---|
| 2 | GPSUS-ABC2-Jumpbox | GPSUS-ABC2-VNet/JumpBox |
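The naming pattern can also be written down as a small helper, which may be handy for double-checking what to pick in the portal. This is only an illustrative sketch: the function name `jumpbox_targets` is hypothetical, and it assumes the partner name replaces 'Name' in both the resource group and the virtual network names.

```python
def jumpbox_targets(partner: str, group: int) -> dict:
    """Build the Jumpbox resource names for one group from the documented pattern."""
    # Assumption: 'Name' is replaced by the partner name everywhere it appears.
    prefix = f"GPSUS-{partner}{group}"
    return {
        "resource_group": f"{prefix}-Jumpbox",
        "vnet": f"{prefix}-VNet",
        "subnet": "JumpBox",
    }


# Example from the text: partner ABC, group 2.
print(jumpbox_targets("ABC", 2))
```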

Exercise 1: Instructions for Creation of Jumpbox

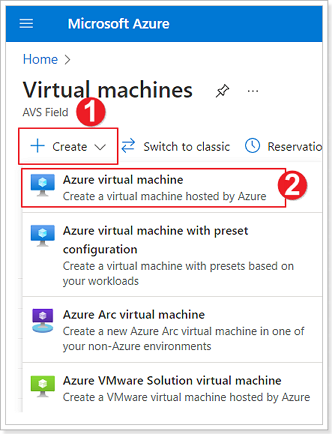

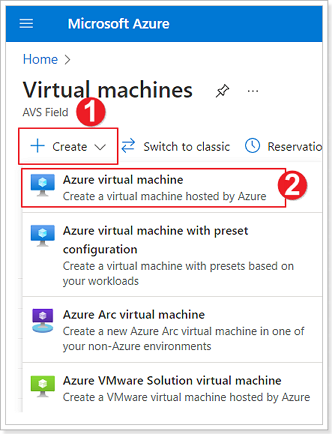

Step 1: Create Azure Virtual Machine

In the Azure Portal locate the Virtual Machines blade.

- Click + Create.

- Select Azure virtual machine.

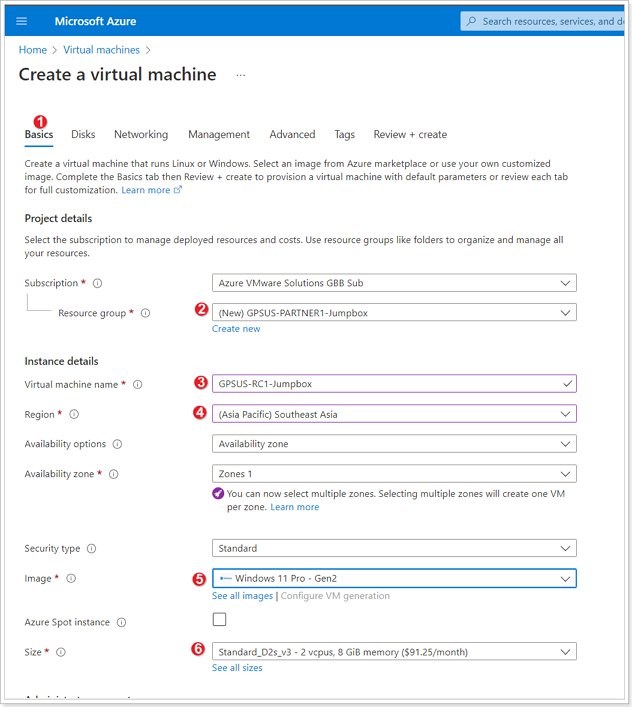

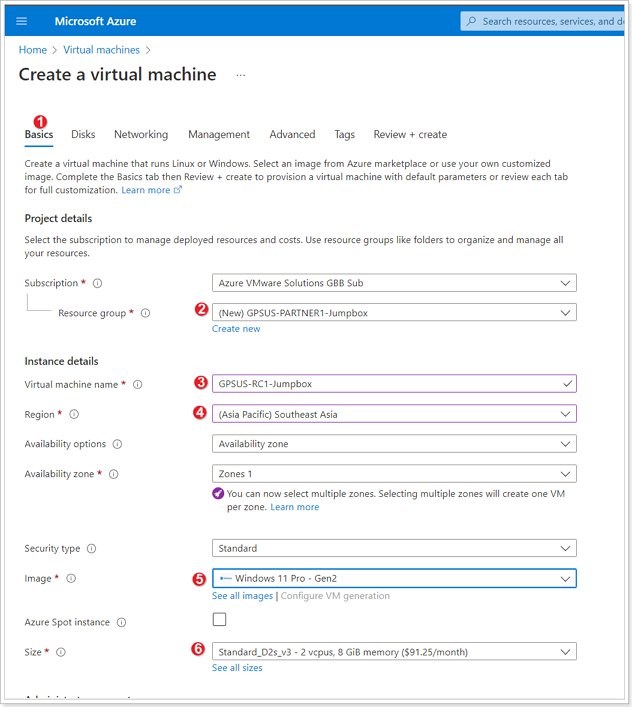

Important notes about the next step

The next step can be confusing and is a frequent source of mistakes. Please pay special attention to the notes at the bottom of the image.

Note that PARTNER1 is just a placeholder, most likely for your organization’s name. Reach out to the moderators for guidance.

Do not create a new resource group; use the existing one. See the notes below about the correct resource group to choose.

Once you select the correct resource group, the Region field is populated with the right value by default.

- Select Basics tab.

- Select the appropriate Resource group per the table below.

- Give your Jumpbox a unique name of your choice. As a suggestion, use your initials followed by the word Jumpbox (e.g. for John Smith it would be JS-Jumpbox).

- Ensure the appropriate Region is selected. It is usually the default region, provided you have selected the right Resource Group (see step #1).

- Select the type of the VM image.

Operating System: Windows 10 or Windows 11

- Ensure the correct Size is selected.

Size: Standard D2s v3 (2vCPUs, 8GiB memory)

Leave other defaults and scroll down on the Basics tab.

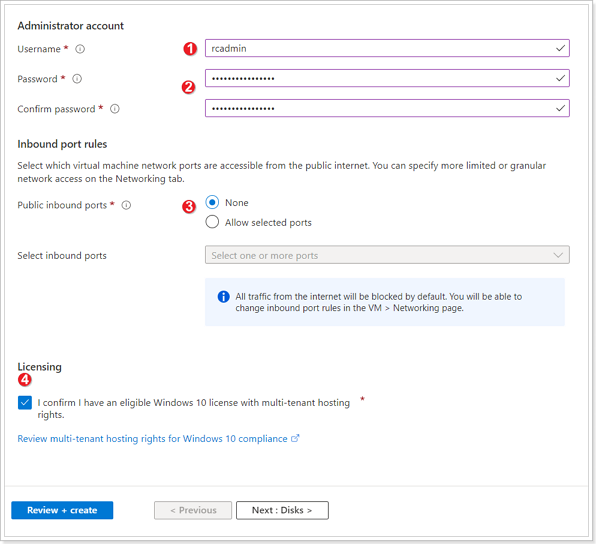

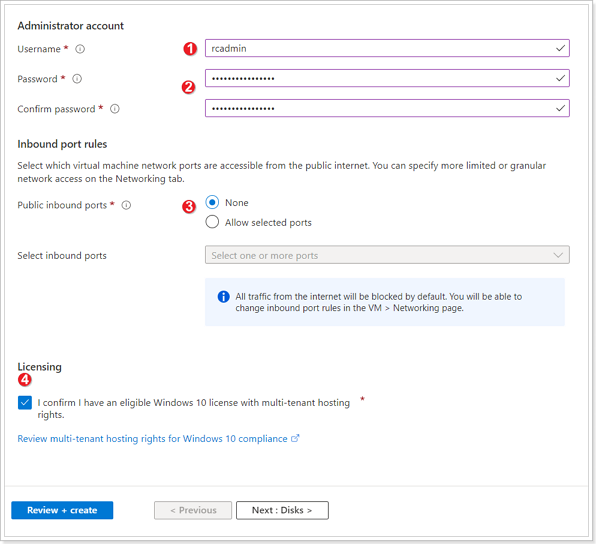

- Enter a user name for your Jumpbox (Anything of your choosing).

- Enter and confirm a password for your Jumpbox user.

- Make sure “None” is selected for Public inbound ports.

- Select the checkbox for “I confirm I have an eligible Windows 10 license”.

Leave all other defaults and go to the Networking tab.

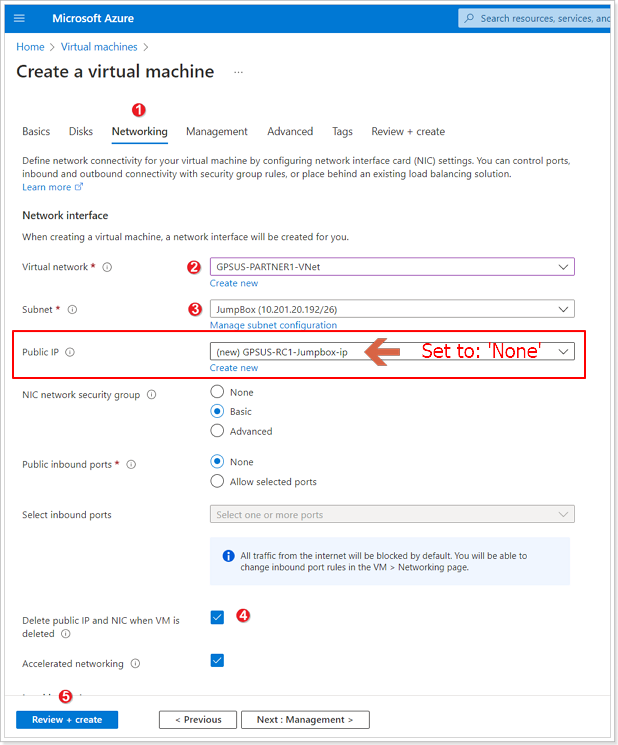

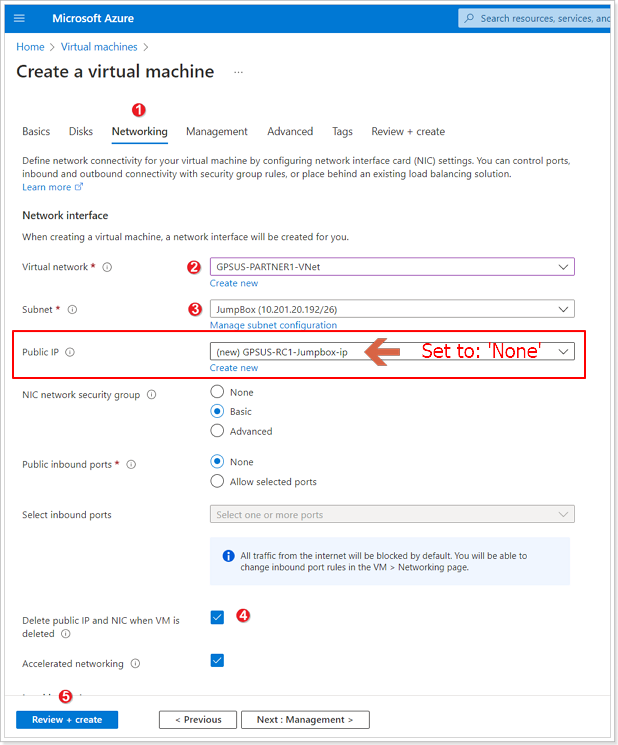

Make sure to select ‘None’ next to the Public IP field.

- Click on Networking tab.

- Select the appropriate VNet based on the table below.

NOTE: This is not the VNet that is loaded by default.

- If the appropriate VNet was selected it should auto-populate the JumpBox subnet. If not, please make sure to select the JumpBox Subnet.

NOTE: Please select None for Public IP. You will not need it. Instead, you’ll be using Azure Bastion, which is already deployed, to access (RDP) into the JumpBox VM.

- Select “Delete public IP and NIC when VM is deleted” checkbox.

- Click Review + Create -> Create.

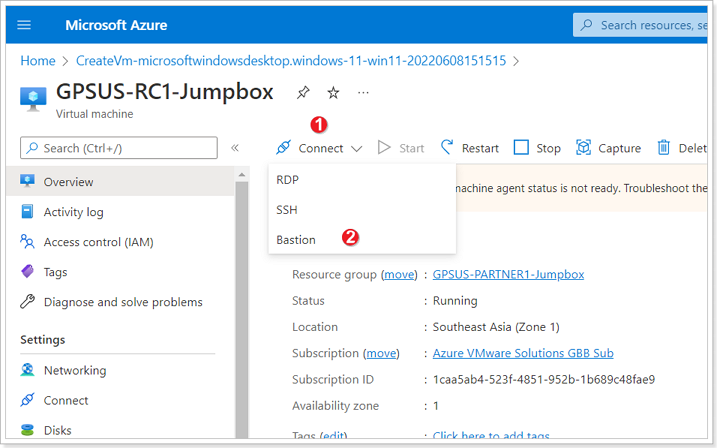

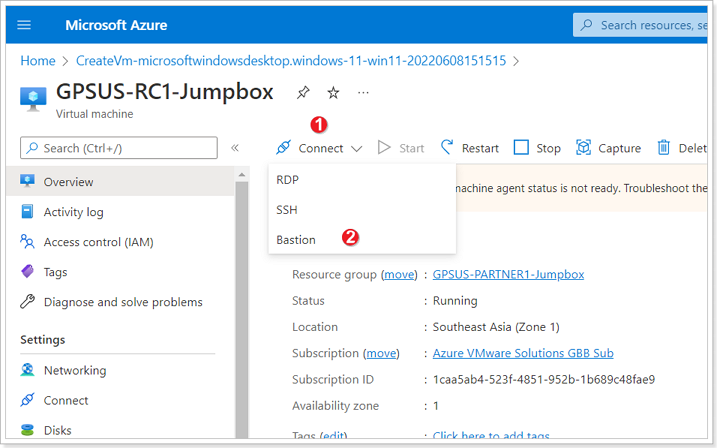

Step 4: Connect to your Azure Virtual Machine Jumpbox

Once your Jumpbox has been created, go to it and click: Connect → Bastion

This should open a new browser tab and connect you to the Jumpbox. Enter the Username and Password you specified for your Jumpbox.

Make sure you allow pop-ups in your Internet browser for Azure Bastion to work properly.

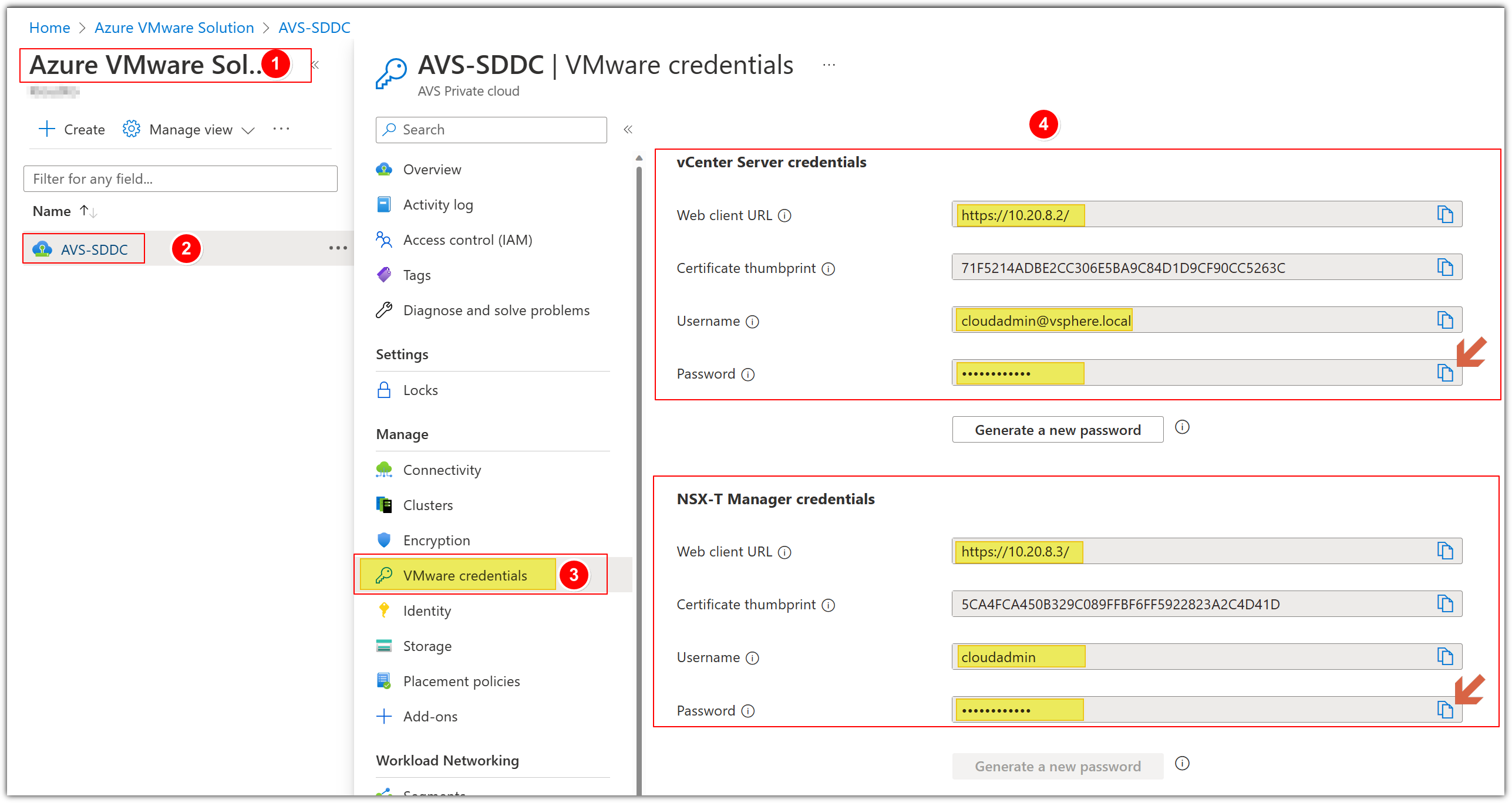

VMware Environments

AVS vCenter, HCX, and NSX-T URLs

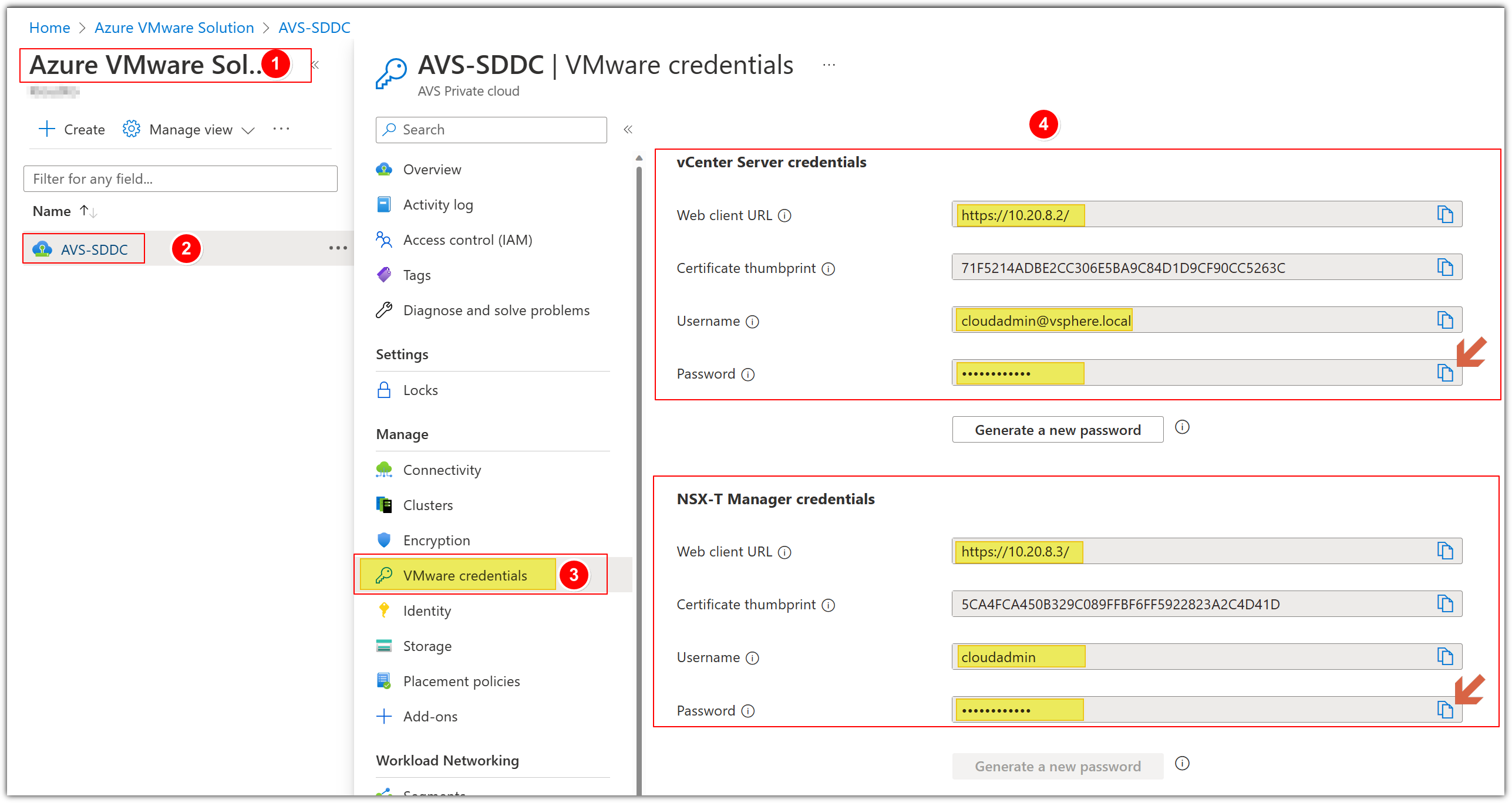

Please refer to the VMware Credentials section under the AVS blade in the Azure portal to retrieve URLs and Login information for vCenter, HCX, and NSX-T.

NOTE: Use the same vCenter credentials to access HCX portal if needed.

PLEASE DO NOT CLICK GENERATE A NEW PASSWORD BUTTON UNDER CREDENTIALS IN AZURE PORTAL

Note: In a real customer environment, the local cloudadmin@vsphere.local account should be treated as an emergency access account for “break glass” scenarios in your private cloud. It’s not for daily administrative activities or integration with other services. For more information see here

In AVS you can predict the IP addresses for vCenter (.2), NSX-T Manager (.3) and HCX (.9). For instance, if you choose 10.20.0.0/22 as your AVS Management CIDR block, the IPs will be as follows:

| vCenter | NSX-T | HCX |
|---|---|---|
| 10.20.0.2 | 10.20.0.3 | 10.20.0.9 |
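The same prediction can be computed for any management CIDR block. The sketch below uses Python's standard `ipaddress` module; the function name `predict_management_ips` is hypothetical, and the offsets are simply the ones stated above (.2, .3 and .9 counted from the start of the block).

```python
import ipaddress

# Host offsets from the start of the management block, as stated in the text.
OFFSETS = {"vCenter": 2, "NSX-T": 3, "HCX": 9}


def predict_management_ips(cidr: str) -> dict:
    """Return the predicted management IPs for an AVS management CIDR block."""
    network = ipaddress.ip_network(cidr)
    return {
        name: str(network.network_address + offset)
        for name, offset in OFFSETS.items()
    }


print(predict_management_ips("10.20.0.0/22"))
```

For the 10.20.0.0/22 example this reproduces the three addresses in the table.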

On-Premises VMware Lab Environment

If you are in a group with multiple participants you will be assigned a participant number.

Replace X with your group number and Y with your participant number.

| Group | Participant | vCenter IP | Username | Password | Web workload IP | App Workload IP |
|---|---|---|---|---|---|---|
| X | Y | 10.X.Y.2 | administrator@avs.lab | MSFTavs1! | 10.X.1Y.1/25 | 10.X.1Y.129/25 |

Example for Group number 1 with 4 participants
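The addresses for such an example can be derived from the X/Y pattern in the table above. The following is a minimal sketch under stated assumptions: the function name `onprem_row` is hypothetical, and "1Y" is read as the digit 1 followed by the participant number (so participant 3 gives 13), which only makes sense for single-digit participant numbers.

```python
import ipaddress


def onprem_row(group: int, participant: int) -> dict:
    """Derive the on-premises lab addresses for one participant."""
    # Assumption: "1Y" means the digit 1 followed by Y, so Y must be 1..9.
    if not 1 <= participant <= 9:
        raise ValueError("this sketch only covers participant numbers 1 to 9")
    third_octet = f"1{participant}"
    row = {
        "vcenter_ip": f"10.{group}.{participant}.2",
        "web_workload": f"10.{group}.{third_octet}.1/25",
        "app_workload": f"10.{group}.{third_octet}.129/25",
    }
    # Sanity check: every derived value must parse as a valid IPv4 interface.
    for value in row.values():
        ipaddress.ip_interface(value)
    return row


# Group 1 with 4 participants.
for participant in range(1, 5):
    print(participant, onprem_row(1, participant))
```

Note that the web and app workload addresses fall into the lower and upper /25 halves of the same /24.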

Credentials for the Workload VM/s

| Username | root |
|---|---|
| Password | 1TestVM!! |

1 - Module 1 Setup AVS Connectivity

Introduction Module 1

Azure VMware Solution offers a private cloud environment accessible from On-Premises and Azure-based resources. Services such as Azure ExpressRoute, VPN connections, or Azure Virtual WAN deliver the connectivity.

Scenario

The customer needs connectivity between their workloads in AVS, existing services and workloads in Azure, and the internet.

Connectivity Options for AVS

This hands-on lab will show you how to configure the Networking components of an Azure VMware Solution for:

- Connecting Azure VNets to AVS over an ExpressRoute circuit (Preconfigured).

- Peering with remote environments using Global Reach (Not Applicable in this lab).

- AVS Interconnect Options

- Configuring NSX-T (check DNS and configure DHCP, Segments, and Gateway) to manage connectivity within AVS.

The lab environment has a preconfigured Azure VMware Solution environment with an ExpressRoute circuit. A nested or embedded VMware environment is configured to simulate an On-Premises environment (PLEASE DO NOT TOUCH).

Both environments are accessible through the Jumpbox VM that you deploy in Azure. You can RDP to the Jumpbox through a preconfigured Azure Bastion service.

After this lab is complete, you will have built out this scenario below:

- ExpressRoute, for connectivity between Azure VMware Solution and Azure Virtual Networks.

- Configure NSX-T to establish connectivity within the AVS environment.

- Creation of Test VMs to attach to your NSX-T Network Segments.

- Explore some advanced NSX-T features such as tagging, groups, and the Distributed Firewall.

1.1 - Module 1 Task 1

Task 1 - AVS Connectivity Options

AVS Connectivity Options

THIS IS FOR REFERENCE ONLY AS IT HAS BEEN PRECONFIGURED FOR THIS LAB.

Section Overview

In this section you will create a connection between an existing, non-AVS Virtual Network in Azure and the Azure VMware Solution environment. This allows the Jumpbox virtual machine you created to manage key components in the VMware management plane such as vCenter, HCX, and NSX-T. You will also be able to access Virtual Machines deployed in AVS and allow those VMs to access resources deployed in the Hub or Spoke VNets, such as Private Endpoints and other Azure VMs or Services.

Summary: Generate a new Authorization Key in the AVS ExpressRoute settings, and then create a new Connection from the Virtual Network Gateway in the VNet to which the JumpBox is connected.

The diagram below shows the respective resource groups for your lab environment.

You will replace Name with Partner Name, for example: GPSUS-Name1-SDDC for partner XYZ would be GPSUS-XYZ1-SDDC.

Option 1: Internal ExpressRoute Setup from AVS -> VNet

NOTE:

- Since we already have a virtual network gateway, you’ll add a connection between it and your Azure VMware Solution private cloud.

- The last step of this section is expected to fail: the Connection will be created, but it will be in a Failed state because another Connection to the same target already exists. This is expected behavior and you can ignore the error.

Step 1: Request an ExpressRoute authorization key

In your AVS Private Cloud:

- Click Connectivity.

- Click ExpressRoute tab.

- Click + Request an authorization key.

Step 2: Name Authorization Key

- Give your authorization key a name: group-XY-key, where X is your group number, and Y is your participant number.

- Click Create. It may take about 30 seconds to create the key. Once created, the new key appears in the list of authorization keys for the private cloud.

Copy the authorization key and ExpressRoute ID and keep them handy. You will need them to complete the peering. The authorization key disappears after some time, so copy it as soon as it appears.

Step 3: Create connection in VNet Gateway

- Navigate to the Virtual Network Gateway named GPSUS-Name#-Network where # is your group number.

- Click Connections.

- Click + Add.

Step 4: Establish VNet Gateway Setup

Enter a Name for your connection. Use GROUPXY-AVS where X is your group number and Y is your participant number.

For Connection type select ExpressRoute.

Ensure the checkbox next to “Redeem authorization” is selected.

Enter the Authorization key you copied earlier.

For Peer circuit URI paste the ExpressRoute ID you copied earlier.

Click OK.

The connection between your ExpressRoute circuit and your Virtual Network is created.

Reminder: The connection is expected to be in a Failed state after creation because another connection to the same target already exists. Next, delete the connection.

Step 5: Delete connection

- Navigate to your Virtual Network Gateway named GPSUS-NameX-GW, where X is your group number.

- Click Connections.

- Select the ellipsis (...) next to the connection with the status of Failed and select Delete.

Option 2: ExpressRoute Global Reach Connection from AVS -> Customer’s on-premises ExpressRoute

ExpressRoute Global Reach connects your on-premises environment to your Azure VMware Solution private cloud. The ExpressRoute Global Reach connection is established between the private cloud ExpressRoute circuit and an existing ExpressRoute connection to your on-premises environments. Click here for more information.

Step 1: ExpressRoute Circuits in Azure Portal

NOTE: There are no ExpressRoute circuits set up in this environment. These steps are informational only.

- In the Azure Portal search bar type ExpressRoute.

- Click ExpressRoute circuits.

Step 2: Create ExpressRoute Authorization

- From the ExpressRoute circuits blade, click Authorizations.

- Give your authorization key a Name.

- Click Save. Copy the Authorization Key created and keep it handy.

- Also copy the Resource ID for the ExpressRoute circuit and keep it handy.

Step 3: Create Global Reach Connection in AVS

- From your AVS Private Cloud blade, click Connectivity.

- Click ExpressRoute Global Reach.

- Click + Add.

- In the ExpressRoute circuit box, paste the Resource ID copied in the previous step.

- Paste the Authorization Key created in the previous step.

- Click Create.

Option 3: AVS Interconnect

The AVS Interconnect feature lets you create a network connection between two or more Azure VMware Solution private clouds located in the same region. It creates a routing link between the management and workload networks of the private clouds to enable network communication between the clouds. Click here for more information.

Step 1: Establish AVS Interconnect in your AVS SDDC

- In your AVS Private Cloud blade, click Connectivity.

- Click AVS Interconnect.

- Click + Add.

Step 2: Add connection to another private cloud

- Subscription and Location are automatically populated based on the values of your Private Cloud, ensure these are correct.

- Select the Resource group of the other Private Cloud you would like to connect to.

- Select the AVS Private cloud you wish to connect.

- Ensure the checkbox next to “I confirm that the two private clouds to be connected don’t contain overlapping network address space” is selected.

- Click Create.

It takes a few minutes for the connection to complete. Once completed the networks found in both Private Clouds will be able to talk to each other. Feel free to perform this exercise if no one in your group has done it as there is a requirement to connect a second Private Cloud in order to perform the exercises in Module 3 (Site Recovery Manager).

Confirm access from Jumpbox

You can now validate access to your Azure VMware Solution components like vCenter and NSX-T from the Jumpbox you created.

Step 1: Obtain AVS Login Credentials

- Navigate to the Azure VMware Solution blade associated with your group: GPSUS-Name#-SDDC.

- Click your assigned AVS SDDC.

- Click Identity.

- You will now see the Login Credentials for both vCenter and NSX-T. You will need these credentials for the next few steps. You do not need to copy the Certificate thumbprint.

PLEASE DO NOT GENERATE A NEW PASSWORD.

Step 2: Access AVS from Jumpbox

From the blade of the Jumpbox you created earlier, click Connect → Bastion.

Once connected to your Jumpbox, open a browser and enter the IP Address for AVS vCenter located in a previous step. There might be a secure browser connection message. Click the advanced button and select the option to continue. Then click on LAUNCH VSPHERE CLIENT (HTML 5).

If the VMware vSphere login page launches successfully, then everything is working as expected.

You’ve now confirmed that you can access AVS from a remote environment.
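If the login page does not load, a quick TCP check from the Jumpbox can help tell a network problem apart from a browser problem. This is a minimal sketch, assuming Python is available on the Jumpbox (it is not part of the lab image as far as this guide states); the function name `can_reach` is hypothetical. A successful connection only shows that the port is open, not that the vSphere Client itself is healthy.

```python
import socket


def can_reach(host: str, port: int = 443, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable networks.
        return False
```

For example, `can_reach("10.20.0.2")` would test HTTPS reachability of a vCenter at that address; substitute the vCenter IP shown for your private cloud in the Azure portal.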

References

Tutorial - Configure networking for your VMware private cloud in Azure - Azure VMware Solution | Microsoft Docs

1.2 - Module 1 Task 2

Task 2: Configure NSX-T to establish connectivity within AVS

NSX-T on AVS

After deploying Azure VMware Solution, you can configure an NSX-T network segment from NSX-T Manager or the Azure portal. Once configured, the segments are visible in Azure VMware Solution, NSX-T Manager, and vCenter.

NSX-T comes pre-provisioned by default with an NSX-T Tier-0 gateway in Active/Active mode and a default NSX-T Tier-1 gateway in Active/Standby mode. These gateways let you connect the segments (logical switches) and provide East-West and North-South connectivity. Machines will not have IP addresses until statically or dynamically assigned from a DHCP server or DHCP relay.

In this Section, you will learn how to:

- Create additional NSX-T Tier-1 gateways.

- Add network segments using either NSX-T Manager or the Azure portal.

- Configure DHCP and DNS.

- Deploy Test VMs in the configured segments.

- Validate connectivity.

In your Jumpbox, open a browser tab and navigate to the NSX-T URL found in the AVS Private Cloud blade in the Azure Portal. Log in using the appropriate credentials noted in the Identity tab.

NOTE: This task is done by default for every new AVS deployment

AVS DNS forwarding services run in DNS zones and enable workload VMs in the zone to resolve fully qualified domain names to IP addresses. Your SDDC includes default DNS zones for the Management Gateway and Compute Gateway. Each zone includes a preconfigured DNS service. Use the DNS Services tab on the DNS Services page to view or update properties of DNS services for the default zones. To create additional DNS zones or configure additional properties of DNS services in any zone, use the DNS Zones tab.

The DNS Forwarder and DNS Zone are already configured for this training but follow the steps to see how to configure it for new environments.

- Ensure the POLICY view is selected.

- Click Networking.

- Click DNS.

- Click DNS Services.

- Click the ellipsis button -> Edit to view/edit the default DNS settings.

- Examine the settings (do not change anything) and click CANCEL.

Exercise 2: Add DHCP Profile in AVS Private Cloud

Replace X with your group’s assigned number and Y with your participant number. For participant 10, replace XY with 20.

| AVS NSX-T Details | Value |
|---|---|
| DHCP Server IP | 10.XY.50.1/30 |
| Segment Name | WEB-NET-GROUP-XY |
| Segment Gateway | 10.XY.51.1/24 |
| DHCP Range | 10.XY.51.4-10.XY.51.254 |
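To avoid typos when substituting XY, the table values can be generated and sanity-checked with a few lines of Python. This is a sketch only: the function name `nsx_segment_plan` is hypothetical, and it takes the already combined XY value (for example 12 for group 1, participant 2) rather than deriving it from X and Y.

```python
import ipaddress


def nsx_segment_plan(xy: int) -> dict:
    """Fill in the NSX-T details table for a given XY value."""
    gateway = ipaddress.ip_interface(f"10.{xy}.51.1/24")
    first = ipaddress.ip_address(f"10.{xy}.51.4")
    last = ipaddress.ip_address(f"10.{xy}.51.254")
    # The DHCP range must sit inside the segment subnet and skip the gateway.
    if first not in gateway.network or last not in gateway.network:
        raise ValueError("DHCP range is outside the segment subnet")
    if first <= gateway.ip <= last:
        raise ValueError("DHCP range must not include the gateway address")
    return {
        "dhcp_server": str(ipaddress.ip_interface(f"10.{xy}.50.1/30")),
        "segment_name": f"WEB-NET-GROUP-{xy}",
        "segment_gateway": str(gateway),
        "dhcp_range": f"{first}-{last}",
    }


print(nsx_segment_plan(12))
```

An XY value that does not fit into an IPv4 octet (above 255) raises a `ValueError` from the `ipaddress` module.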

A DHCP profile specifies a DHCP server type and configuration. You can use the default profile or create others as needed.

A DHCP profile can be used to configure DHCP servers or DHCP relay servers anywhere in your SDDC network.

Step 1: Add DHCP Profile

- In the NSX-T Console, click Networking.

- Click DHCP.

- Click ADD DHCP PROFILE.

- Name the profile as DHCP-Profile-GROUP-XY-AVS for your respective group/participant.

- Ensure DHCP Server is selected.

- Specify the IPv4 Server IP Address as 10.XY.50.1/30 and optionally change the Lease Time or leave the default.

- Click SAVE.

Exercise 3: Create an NSX-T T1 Logical Router

NSX-T has the concept of Logical Routers (LR). These Logical Routers can perform both distributed and centralized functions. In AVS, NSX-T is deployed and configured with a default T0 Logical Router and a default T1 Logical Router.

The T0 LR in AVS cannot be modified by AVS customers, but the T1 LR can be configured as the customer chooses. AVS customers can also add additional T1 LRs as they see fit.

Step 1: Add Tier-1 Gateway

- Click Networking.

- Click Tier-1 Gateways.

- Click ADD TIER-1 GATEWAY.

- Give your T1 Gateway a Name. Use GROUP-XY-T1.

- Select the default T0 Gateway, usually TNT**-T0.

- Click SAVE. Click NO to the question “Want to continue configuring this Tier-1 Gateway?”.

Exercise 4: Add the DHCP Profile to the T1 Gateway

- Click the ellipsis next to your newly created T1 Gateway.

- Click Edit.

Step 1: Set DHCP Configuration to Tier-1 Gateway

- Click Set DHCP Configuration.

- After finishing the DHCP Configuration, click to expand Route Advertisement and make sure all the buttons are enabled.

- Ensure DHCP Server is selected for Type.

- Select the DHCP Server Profile you previously created.

- Click SAVE. Click SAVE again to confirm changes, then click CLOSE EDITING.

Exercise 5: Create Network Segment for AVS VM workloads

Network segments are logical networks for use by workload VMs in the SDDC compute network. Azure VMware Solution supports three types of network segments: routed, extended, and disconnected.

A routed network segment (the default type) has connectivity to other logical networks in the SDDC and, through the SDDC firewall, to external networks.

An extended network segment extends an existing L2VPN tunnel, providing a single IP address space that spans the SDDC and an On-Premises network.

A disconnected network segment has no uplink and provides an isolated network accessible only to VMs connected to it. Disconnected segments are created when needed by HCX. You can also create them yourself and can convert them to other segment types.

Step 1: Add Network Segment

- Click Networking.

- Click Segments.

- Click ADD SEGMENT.

- Enter WEB-NET-GROUP-XY in the Segment Name field.

- Select the Tier-1 Gateway you created previously (GROUP-XY-T1) as the Connected Gateway.

- Select the pre-configured overlay Transport Zone (TNTxx-OVERLAY-TZ).

- In the Subnets column, you will enter the IP Address for the Gateway of the Subnet that you are creating, which is the first valid IP of the Address Space.

- For Example: 10.XY.51.1/24

- Then click SET DHCP CONFIG.

Step 3: Set DHCP Configuration on Network Segment

- Ensure Gateway DHCP Server is selected for DHCP Type.

- In the DHCP Config click the toggle button to Enabled.

- Then in the DHCP Ranges field enter the range according to the IPs assigned to your group. The range must be in the same network as the Gateway defined above.

- Use 10.XY.51.4-10.XY.51.254

- In the DNS Servers, enter the IP 1.1.1.1.

- Click Apply. Then SAVE and finally NO.

Important

The IP address needs to be on a non-overlapping RFC1918 address block, which ensures connection to the VMs on the new segment.
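Both conditions (RFC1918 and non-overlapping) can be checked before creating a segment. The sketch below uses Python's standard `ipaddress` module; the function name `check_segment` is hypothetical, and the list of existing networks in the example is illustrative, not an inventory of this lab.

```python
import ipaddress

# The three private address blocks defined by RFC 1918.
RFC1918_BLOCKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def check_segment(candidate: str, existing: list) -> list:
    """Return a list of problems found for a candidate segment subnet."""
    # strict=False accepts a gateway address such as 10.12.51.1/24.
    net = ipaddress.ip_network(candidate, strict=False)
    problems = []
    if not any(net.subnet_of(block) for block in RFC1918_BLOCKS):
        problems.append(f"{net} is not inside an RFC1918 block")
    for other in existing:
        other_net = ipaddress.ip_network(other, strict=False)
        if net.overlaps(other_net):
            problems.append(f"{net} overlaps {other_net}")
    return problems


# Hypothetical existing networks: a management block and a DHCP server subnet.
print(check_segment("10.12.51.1/24", ["10.20.0.0/22", "10.12.50.0/30"]))
print(check_segment("10.20.1.1/24", ["10.20.0.0/22"]))
```

An empty list means no problem was found against the networks you supplied; it says nothing about networks that were left out of the list.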


1.3 - Module 1 Task 3

Create Test VMs and connect to Segment

Create Test VMs

Now that we have our networks created, we can deploy virtual machines and ensure we can get an IP address from DHCP. Go ahead and log in to your AVS vCenter.

Exercise 1: Create a Content Library

Step 1: Create vCenter Content Library

- From AVS vCenter, click the Menu bars.

- Click Content Libraries.

- Click CREATE.

Step 2: Give your Content Library a Name and Location

- Name your Library LocalLibrary-XY where X is your group number and Y is your participant number

- Click NEXT

- Leave the defaults for Configure content library and for Apply security policy.

Step 3: Specify Datastore for Content Library

- For Add storage select the vsanDatastore.

- Click NEXT then FINISH

Exercise 2: Import Item to Content Library

Step 1: Import OVF/OVA to Content Library

- Click on your newly created Library and click Templates.

- Click OVF & OVA Templates

- Click ACTIONS

- Click Import item

Step 2: Specify URL for OVF/OVA

Import using this URL:

https://gpsusstorage.blob.core.windows.net/ovas-isos/workshop-vm.ova

This will now download and import the VM to the library.

Exercise 3: Create VMs

Step 1: Create VM from Template

Once downloaded, right-click the VM Template > New VM from This Template.

Step 2: Select a Name and Folder for the VM

- Give the VM a name – e.g. VM1-AVS-XY

- Select the SDDC-Datacenter

- Click NEXT

Step 3: Select a Compute Resource

- Select Cluster-1

- Click NEXT

Step 4: Review Details, select Datastore

- Review Details and click NEXT. Accept the terms and click NEXT

- Confirm the storage as the vsanDatastore

- Click NEXT

Step 5: Select network for VM

Select the segment that you created previously (“WEB-NET-GROUP-XY”) and click NEXT. Then review and click FINISH.

Once deployed, head back to VMs and Templates and power on the newly created VM. This VM is a very lightweight Linux machine that will automatically pick up an address from DHCP if one is configured. Since we added it to the WEB-NET-GROUP-XY segment, it should get an IP address from that segment’s DHCP range. This usually takes a few seconds. Click the “Refresh” button on the vCenter toolbar.

If you see an IP address here, the VM has been configured successfully: it is connected to the segment and is accessible from the Jumpbox.

We can confirm this by SSH’ing to this IP address from the Jumpbox.

Username: root

Password: AVSR0cks!

YOU MAY BE ASKED TO CHANGE THE PASSWORD OF THE ROOT USER ON THE VM, CHANGE IT TO A PASSWORD OF YOUR CHOOSING, JUST REMEMBER WHAT THAT PASSWORD IS.

Once you SSH into the VMs, enter these 2 commands to enable ICMP traffic on the VM:

iptables -A OUTPUT -p icmp -j ACCEPT

iptables -A INPUT -p icmp -j ACCEPT

PLEASE REPEAT THESE STEPS AND CREATE A SECOND VM CALLED ‘VM2-AVS-XY’

1.4 - Module 1 Task 4

Task 4: Advanced NSX-T Features within AVS

Section Overview:

You can find more information about NSX-T capabilities on VMware’s website under VMware NSX-T Data Center Documentation.

In this Section, you will learn about a few additional advanced NSX-T features. You will learn how to:

- Create NSX-T tags for VMs

- Create NSX-T groups based on tags

- Create Distributed Firewall Rules in NSX-T

NSX-T Tags help you label NSX-T Data Center objects so that you can quickly search or filter objects, troubleshoot and trace, and do other related tasks.

You can create tags using both the NSX-T UI available within AVS and APIs.

More information on NSX-T Tags can be found here: VMware NSX-T Data Center Documentation.

Two Test VMs required

Please make sure that you have created 2 Test VMs as explained in the previous Task before you proceed in this exercise.

- From the NSX-T UI, click Inventory.

- Click Virtual Machines.

- Locate the 2 Virtual Machines you created in the previous task and notice that they have no tags.

- Click the ellipsis next to the first VM and click Edit.

Step 2: Name your VM’s tag

- Type “GXY”, where X is your group number and Y is your participant number.

- Click to add GXY as a tag to this VM.

- Click SAVE.

REPEAT THE ABOVE STEPS FOR VM2 USING THE SAME TAG.

NSX-T Groups

Groups include different objects that are added both statically and dynamically, and can be used as the source and destination of a firewall rule.

Groups can be configured to contain a combination of Virtual Machines, IP sets, MAC sets, segment ports, segments, AD user groups, and other groups. Dynamic inclusion in groups can be based on a tag, machine name, OS name, or computer name.

You can find more information on NSX-T Groups on VMware’s NSX-T Data Center docs.

Exercise 2: Create NSX-T Groups

Step 1: Create an NSX-T Group

Now that we’ve assigned tags to the VMs, we’ll create a group based on those tags.

- Click Inventory

- Click Groups

- Click ADD GROUP

Step 2: Name your NSX-T Group and Assign Members

- Name your group GROUP-XY where X is your group number and Y is your participant number

- Click Set Members

Step 3: Set the Membership Criteria for your Group

- Click ADD CRITERIA

- Select Virtual Machine

- Select Tag

- Select Equals

- Select your previously created tag GXY

- Click APPLY. Then click SAVE

NSX-T Distributed Firewall

NSX-T Distributed Firewall monitors all East-West traffic on your AVS Virtual Machines and allows you to either deny or allow traffic between these VMs even if they exist on the same NSX-T Network Segment. This is the case for your 2 VMs; we will assume they are 2 web servers that should never have to talk to each other. More information can be found here: Distributed Firewall.

Exercise 3: Create an NSX-T Distributed Firewall Policy

Step 1: Add a Policy

- Click Security

- Click Distributed Firewall

- Click + ADD POLICY

- Give your policy a name “Policy XY” where X is your group number and Y is your participant number

- Click the ellipsis and select Add Rule

Step 2: Add a Rule

- Name your rule “Rule XY”

- Click under Sources column and select your newly created “GROUP-XY”

- Click under Destinations and also select “GROUP-XY”

- Leave all other defaults, and for now, leave the Action set to Allow. We will change this later to understand the behavior

- Click PUBLISH to publish the newly created Distributed Firewall Rule

Step 3: Reject communication in your Distributed Firewall Rule

Hopefully you still have the SSH sessions open to your 2 VMs you created earlier. If not, just SSH again. From one of the VMs, run a continuous ping to the other VM’s IP address like the example below.

- Change the Action in your Rule to Reject

- Click PUBLISH

After you publish the change to Reject, the ping displays “Destination Host Prohibited”. With Reject, the NSX-T Distributed Firewall blocks the packet and sends an ICMP error back to the sender, which is why the ping reports the failure immediately. You can also change the Action to Drop, in which case the packet is discarded silently and the ping simply times out.
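The interplay of tags, groups and rule actions in this exercise can be summarized with a toy model. This is purely illustrative and is not the NSX-T API: the names `Rule`, `evaluate` and `ping_outcome` are hypothetical, groups are reduced to a single tag, rules are checked top-down with the first match winning, and the default action when nothing matches is assumed to be Allow.

```python
from dataclasses import dataclass


@dataclass
class Rule:
    name: str
    source_tag: str  # tag that defines the source group
    dest_tag: str    # tag that defines the destination group
    action: str      # "ALLOW", "REJECT" or "DROP"


def evaluate(rules, vm_tags, src, dst, default="ALLOW"):
    """Return the action of the first rule whose groups contain src and dst."""
    for rule in rules:
        in_source = rule.source_tag in vm_tags.get(src, set())
        in_dest = rule.dest_tag in vm_tags.get(dst, set())
        if in_source and in_dest:
            return rule.action
    return default  # assumption: unmatched traffic is allowed


def ping_outcome(action):
    """Describe what a ping from the source VM would show for an action."""
    return {
        "ALLOW": "reply received",
        "REJECT": "error returned to sender",
        "DROP": "request times out",
    }[action]


# Two test VMs sharing the tag G12 (group 1, participant 2).
vm_tags = {"VM1-AVS-12": {"G12"}, "VM2-AVS-12": {"G12"}}
rules = [Rule("Rule 12", "G12", "G12", "REJECT")]

action = evaluate(rules, vm_tags, "VM1-AVS-12", "VM2-AVS-12")
print(action, "->", ping_outcome(action))
```

Changing the rule's action to "DROP" or "ALLOW" in the model mirrors what you observe in the running ping when you republish the rule.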

2 - Module 2 - HCX for VM Migration

Module 2: Deploy HCX for VM Migration

Introduction to VMware HCX

VMware HCX™ is an application mobility platform designed for simplifying application migration, workload rebalancing and business continuity across data centers and clouds. HCX supports the following types of migrations:

- Cold Migration - Offline migration of VMs.

- Bulk Migration - scheduled bulk VM (vSphere, KVM, Hyper-V) migrations with reboot – low downtime.

- HCX vMotion - Zero-downtime live migration of VMs – limited scale.

- Cloud to Cloud Migrations – direct migrations between VMware Cloud SDDCs moving workloads from region to region or between cloud providers.

- OS Assisted Migration – bulk migration of KVM and Hyper-V workloads to vSphere (HCX Enterprise feature).

- Replication Assisted vMotion - Bulk live migrations with zero downtime combining HCX vMotion and Bulk migration capabilities (HCX Enterprise feature).

In this module, we will go through the steps to install and configure HCX and migrate a test VM to Azure VMware Solution (AVS).

For more information on HCX, please visit VMware’s HCX Documentation.

HCX Setup for Azure VMware Solution (AVS)

Prerequisites

- Ensure that Module 1 has been completed successfully as this will be required to connect HCX from AVS to the On-Premises Lab.

- Ability to reach the vCenter portals from the Jumpbox VM:

- AVS vCenter: Get IP from Azure Portal - AVS blade

- On-premises vCenter: 10.X.Y.2

Remember that X is your group number and Y your participant number.

2.1 - Module 2 Task 1

Task 1: Install VMware HCX on AVS Private Cloud

Exercise 1: Enable HCX on AVS Private Cloud

In the following task, we will be installing HCX on your AVS Private Cloud. This is a simple process from the Add-ons section in the Azure Portal, or via Bicep/ARM/PowerShell.

NOTE: This task may or may not have been completed for you in your AVS environment. Only one participant per group can enable HCX in the SDDC so if you’re not the first participant in the group to enable HCX, just use these instructions for reference.

Step 1: Navigate to your SDDC

- Navigate to the Azure Portal, search for Azure VMware Solution in the search bar.

- Click on Azure VMware Solution.

Step 2: Locate your AVS SDDC

- Select the private cloud assigned to you or your group.

Step 3: Enable HCX on your AVS Private Cloud

- From your Private Cloud blade, click on + Add-ons.

- Click Migration using HCX.

- Select the checkbox to agree with terms and conditions.

- Click Enable and deploy.

HCX will start getting deployed in your AVS Private Cloud and it should take about 10-20 minutes to complete.

2.2 - Module 2 Task 2

Task 2: Download the HCX OVA to On-Premises vCenter

You will perform the instructions below from the AVS VMware Environment

Exercise 1: Download HCX OVA for Deployment of HCX on-premises

The next step is to download HCX to our On-Premises VMware environment. This will allow us to set up the connectivity to AVS and migrate VMs. The HCX appliance is provided by VMware and has to be requested from within the AVS HCX Manager.

- Obtain the AVS vCenter credentials by going to your AVS Private Cloud blade in the Azure portal and selecting VMware credentials.

- cloudadmin@vsphere.local is the local vCenter user for AVS, keep this handy.

- You can copy the Admin password to your clipboard and keep it handy as well.

Step 3: Locate HCX Cloud Manager IP

- In your AVS Private Cloud blade, click + Add-ons.

- Click Migration using HCX.

- Copy the HCX Cloud Manager IP URL, open a new browser tab and paste it, and enter the cloudadmin credentials obtained above.

Step 4: Request HCX OVA Download Link

The screenshot below is from AVS VMware Environment

The Request Download Link button will be grayed out initially but will be live after a minute or two. Do not navigate away from this page. Once available, you will have an option to Download the OVA or Copy a Link.

This link is valid for 1 week.

2.3 - Module 2 Task 3

Task 3: Import the OVA file to the On-Premises vCenter

Import the OVA file to the On-Premises vCenter

In this step we will import the HCX appliance into the on premises vCenter.

You can choose to do this Task in 2 different ways:

Step 1: Obtain your AVS vCenter Server credentials

- In your AVS Private Cloud blade click Identity.

- Locate and save both vCenter admin username cloudadmin@vsphere.local and password.

Step 2: Locate HCX Cloud Manager IP

- Click on + Add-ons.

- Copy the HCX Cloud Manager IP.

Step 3: Log in to HCX Cloud Manager IP

You will perform the instructions below from AVS VMware Environment

Open a browser tab and paste the HCX Cloud Manager IP and enter the credentials obtained in the previous step.

Step 4: Request Download Link for HCX OVA

- In the left pane click System Updates.

- Click REQUEST DOWNLOAD LINK. Keep in mind that the button might take a couple of minutes to become enabled.

Option 1: Download and deploy HCX OVA to on-premises vCenter

- Click VMWARE HCX to download the HCX OVA locally.

Option 2: Deploy HCX from a vCenter Content Library

- Click COPY LINK if you will install HCX with this method.

You will perform the instructions below from the On-premises VMware Environment

Step 1: Access Content Libraries from on-premises vCenter

Browse to the on-premises vCenter URL. See the Getting Started section for more information and login details.

- From your on-premises vCenter click Menu.

- Click Content Libraries.

Step 2: Create a new Content Library

Create a new local content library if one doesn’t exist by clicking the + sign.

Step 3: Import Item to Content Library

- Click ACTIONS.

- Click Import Item.

- Enter the HCX URL copied in a previous step.

- Click IMPORT.

Accept any prompts and actions and proceed. The HCX OVA will download to the library in the background.

2.4 - Module 2 Task 4

Task 4: Deploy the HCX OVA to On-Premises vCenter

Deploy HCX OVA

In this step, we will deploy the HCX VM with the configuration from the On-Premises VMware Lab Environment section.

You will perform the instructions below from the On-premises VMware Environment

Step 1: Deploy HCX connector VM

If Option 1: Deploy OVA from download.

- Right-click Cluster-1.

- Click Deploy OVF Template.

- Click the button to point to the location of the downloaded OVA for HCX.

- Click NEXT.

If Option 2: Deploy HCX from Content Library

- Once the import is completed from the previous task, click Templates.

- Right-click the imported HCX template.

- Click New VM from This Template.

- Give your HCX Connector a name: HCX-OnPrem-X-Y, where X is your group number and Y is your participant number.

- Click NEXT.

Step 2: Name the HCX Connector VM

- Give your HCX Connector a name: HCX-OnPrem-X-Y, where X is your group number and Y is your participant number.

- Click NEXT.

Step 3: Assign the network to your HCX Connector VM

Keep the defaults for:

- Compute Resource

- Review details

- License agreements (Accept)

- Storage (LabDatastore)

- Click to select management network.

- Click NEXT.

Step 4: Customize template

| Property | Value |
|---|---|
| Hostname | Suggestion: HCX-OnPrem-X-Y (Note: do not leave a space in the name, as this causes the web server to fail) |
| CLI “admin” User Password/root Password | MSFTavs1! |
| Network 1 IPv4 Address | 10.X.Y.9 |
| Network 1 IPv4 Prefix Length | 27 |
| Default IPv4 Gateway | 10.X.Y.1 |
| DNS Server list | 1.1.1.1 |
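The X and Y placeholders in the table above can be expanded mechanically. The following is a minimal Python sketch, not part of the lab tooling: the function name `hcx_connector_settings` is hypothetical, and group 1 / participant 2 are used purely as an illustration. It uses the standard `ipaddress` module to confirm that the gateway sits in the same /27 as the appliance address.

```python
import ipaddress


def hcx_connector_settings(group, participant):
    """Expand the X (group) and Y (participant) placeholders from the table."""
    hostname = f"HCX-OnPrem-{group}-{participant}"
    # The guide warns that a space in the hostname makes the web server fail.
    if any(ch.isspace() for ch in hostname):
        raise ValueError("hostname must not contain spaces")

    address = ipaddress.ip_interface(f"10.{group}.{participant}.9/27")
    gateway = ipaddress.ip_address(f"10.{group}.{participant}.1")
    # The default gateway has to be inside the appliance's own subnet.
    if gateway not in address.network:
        raise ValueError("gateway is outside the appliance subnet")

    return {
        "hostname": hostname,
        "ipv4_address": str(address.ip),
        "prefix_length": address.network.prefixlen,
        "gateway": str(gateway),
        "dns": "1.1.1.1",
    }


# Illustrative values only: group 1, participant 2.
settings = hcx_connector_settings(1, 2)
for key, value in settings.items():
    print(f"{key}: {value}")
```

Writing the values down this way before starting the wizard can help avoid typing the wrong octet in the Customize template step.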

Step 5: Validate deployment

Once done, navigate to Menu > VMs and Templates and power on the newly created HCX Manager VM.

The boot process may take 10-15 minutes to complete.

2.5 - Module 2 Task 5

Task 5: Obtain HCX License Key

Obtain HCX License Key

While the HCX installation runs, we will need to obtain a license key to activate HCX. This is available from the AVS blade in the Azure Portal.

Step 1: Create HCX Key from Azure Portal

- Click + Add-ons.

- Click + Add.

- Give your HCX Key a name: HCX-OnPrem-X-Y, where X is your group number and Y your participant number.

- Click Yes.

Save the key. You will need it to activate HCX in your on-premises setup.

2.6 - Module 2 Task 6

Task 6: Activate VMware HCX

You will perform the instructions below from the On-premises VMware Environment

Activate VMware HCX

In this task, we will activate the On-Premises HCX appliance that we just deployed in Task 4.

Step 1: Log in to HCX Appliance Management Interface

- Browse to the On-Premises HCX Manager IP specified in Task 4 on port 9443 and log in (make sure you use https:// in the browser address bar).

- Log in using the HCX credentials specified in Task 4.

- Username: admin

- Password: MSFTavs1! (specified earlier in Task 4).

Step 2: Enter HCX Key

Once logged in, follow the steps below.

- Don’t change the HCX Activation Server field. Please keep as is.

- In the HCX License Key field, enter the HCX activation key that you obtained from the AVS blade in the Azure Portal.

- Lastly, select Activate. Please keep in mind that this process can take several minutes.

Step 3: Enter Datacenter Location, System Name

In Datacenter Location, enter the major city nearest to the location where the VMware HCX Manager is installed on-premises, then select Continue. In System Name, change the name to HCX-OnPrem-X-Y and click Continue.

Note: The city location does not matter in this lab. It’s just a named location for visualization purposes.

Step 4: Continue to complete configuration

Click “YES, CONTINUE” to proceed to the next task. After a few minutes HCX should be successfully activated.

2.7 - Module 2 Task 7

Task 7: Configure HCX and connect to vCenter

You will perform the instructions below from the On-premises VMware Environment

In this section, we will integrate and configure HCX Manager with the On-Premises vCenter Server.

Step 1: Connect vCenter Server

- In Connect your vCenter, provide the FQDN or IP address of on-premises vCenter server and the appropriate credentials.

- Click CONTINUE.

- In Configure SSO/PSC, provide the same vCenter IP address: https://10.X.Y.2

- Click CONTINUE.

Step 3: Restart HCX Appliance

Verify that the information entered is correct and select RESTART.

The reboot process might take 10 to 15 minutes. Keep checking every 3-4 minutes to ensure you can get to HCX Manager.
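The periodic check described above can be scripted. The sketch below is an assumption-laden illustration rather than an official procedure: `tcp_probe` and `wait_for_port` are hypothetical helper names, the port 9443 comes from the login step in Task 6, and a successful TCP connection only shows that the port is open, not that the HCX UI has finished loading. The probe and sleep functions are injectable so the logic can be dry-run without network access.

```python
import socket
import time


def tcp_probe(host, port, timeout_s=5.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout_s):
            return True
    except OSError:
        return False


def wait_for_port(host, port=9443, interval_s=180, attempts=5,
                  probe=tcp_probe, sleep=time.sleep):
    """Probe host:port up to `attempts` times, sleeping between tries.

    Returns the 1-based attempt number that succeeded, or None.
    """
    for attempt in range(1, attempts + 1):
        if probe(host, port):
            return attempt
        if attempt < attempts:
            sleep(interval_s)
    return None


# Dry run with a fake probe (no network access): fails twice, then succeeds.
answers = iter([False, False, True])
result = wait_for_port("10.1.2.9", probe=lambda h, p: next(answers),
                       sleep=lambda s: None)
print(result)  # 3
```

With the defaults (5 attempts, 180 seconds apart), a real run such as `wait_for_port("10.X.Y.9")` would cover roughly the 15-minute reboot window mentioned above.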

- After the services restart, you’ll see vCenter showing as Green on the screen that appears. Both vCenter and SSO must have the appropriate configuration parameters, which should be the same as the previous screen.

- Next, click on Configuration to complete the HCX configuration.

- Click Configuration.

- Click HCX Role Mapping.

- Click Edit.

- Change User Groups value to match lab SSO configuration: avs.lab\Administrators

- Save changes.

Please note that by default HCX assigns the HCX administrator role to “vsphere.local\Administrators”. In real life, customers will often have an SSO domain other than vsphere.local, in which case this value needs to be changed. That is the case in this lab, where it needs to be changed to avs.lab.

It may take an additional 5-10 minutes for the HCX plugins to be installed in vCenter. If the plugin does not show up automatically, log out and log back in.

2.8 - Module 2 Task 8

Task 8: Create Site Pairing from On-premises HCX to AVS HCX

You will perform the instructions below from the On-premises VMware Environment

HCX Site Pairing

In this task, we will be creating the Site Pairing to connect the On-Premises HCX appliance to the AVS HCX appliance.

Step 1: Access On-Premises HCX

There are 2 ways to access HCX:

- Through the vCenter server plug-in. Click Menu -> HCX.

- Through the stand-alone UI. Open a browser tab and go to your local HCX Connector IP: https://10.X.Y.9

In either case, log in with your vCenter credentials:

- Username: administrator@avs.lab

- Password: MSFTavs1!

NOTE: If working through vCenter Server, you may see a banner prompting you to refresh the browser. Refreshing loads the newly installed HCX modules. If you do not see the banner, log out and log back into vCenter.

Step 2: Connect to Remote Site

- Click Site Pairing in the left pane.

- Click CONNECT TO REMOTE SITE.

- Enter credentials for your AVS vCenter found in the Azure Portal. The Remote HCX URL is found under the Add-ons blade and it is NOT the vCenter URL.

- Click CONNECT.

- Accept the certificate warning and click Import.

NOTE: Ideally the identity provided in this step should be an AD based credential with delegation instead of the cloudadmin account.

Connection to the remote site will be established.

2.9 - Module 2 Task 9

Task 9: Create network profiles

You will perform the instructions below from the On-premises VMware Environment

HCX Network Profiles

A Network Profile is an abstraction of a Distributed Port Group, Standard Port Group, or NSX Logical Switch, and the Layer 3 properties of that network. A Network Profile is a sub-component of a complete Compute Profile.

Customer environments may vary and may not have separate networks.

In this Task you will create a Network Profile for each network intended to be used with HCX services. More information can be found in VMware’s Official Documentation, Creating a Network Profile.

- Management Network - The HCX Interconnect Appliance uses this network to communicate with management systems like the HCX Manager, vCenter Server, ESXi Management, NSX Manager, DNS, NTP.

- vMotion Network - The HCX Interconnect Appliance uses this network for the traffic exclusive to vMotion protocol operations.

- vSphere Replication Network - The HCX Interconnect Appliance uses this network for the traffic exclusive to vSphere Replication.

- Uplink Network - The HCX Interconnect appliance uses this network for WAN communications, like TX/RX of transport packets.

These networks have been defined for you. Please see the section below.

In a real customer environment, these will have been planned and identified previously; see here for the planning phase.

Step 1: Create 4 Network Profiles

- Click Interconnect.

- Click Network Profiles.

- Click CREATE NETWORK PROFILE.

In this lab, these are in the Network Profile Information section.

We will create 4 separate network profiles:

- Select Distributed Port Groups.

- Select Management Network.

- Enter the Management Network IP range from the table below. Remember to replace X with your group number and Y with your participant number. Repeat the same steps for the Replication, vMotion and Uplink Network Profiles.

- Ensure you select the appropriate checkboxes for the type of Network Profile you’re creating.

You should create a total of 4 Network Profiles.

Management Network Profile

| Property | Value |
|---|---|
| Management Network IP | 10.X.Y.10-10.X.Y.16 |
| Prefix Length | 27 |
| Management Network Gateway | 10.X.Y.1 |

Uplink Network Profile

| Property | Value |
|---|---|
| Uplink Network IP | 10.X.Y.34-10.X.Y.40 |
| Prefix Length | 28 |
| Uplink Network Gateway | 10.X.Y.33 |
| DNS | 1.1.1.1 |

vMotion Network Profile

| Property | Value |
|---|---|
| vMotion Network IP | 10.X.Y.74-10.X.Y.77 |
| Prefix Length | 27 |
| vMotion Network Gateway | 10.X.Y.65 |
| DNS | 1.1.1.1 |

Replication Network Profile

| Property | Value |
|---|---|
| Replication IP | 10.X.Y.106-10.X.Y.109 |
| Prefix Length | 27 |
| Replication Network Gateway | 10.X.Y.97 |
| DNS | 1.1.1.1 |
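Before typing the four profiles into HCX, it can help to sanity-check that each IP pool really sits inside the subnet implied by its gateway and prefix length. The sketch below encodes the four tables above and checks them with Python's standard `ipaddress` module. The `PROFILES` layout and the `check_profiles` name are illustrative assumptions, and group 1 / participant 2 are example values only.

```python
import ipaddress

# Last-octet values copied from the four profile tables:
# (first pool IP, last pool IP, prefix length, gateway)
PROFILES = {
    "Management": (10, 16, 27, 1),
    "Uplink": (34, 40, 28, 33),
    "vMotion": (74, 77, 27, 65),
    "Replication": (106, 109, 27, 97),
}


def check_profiles(group, participant):
    """Return {profile: (subnet, pool size)} or raise if a pool IP is out of range."""
    base = f"10.{group}.{participant}."
    results = {}
    for name, (first, last, prefix, gateway) in PROFILES.items():
        # Derive the subnet from the gateway address and prefix length.
        network = ipaddress.ip_interface(f"{base}{gateway}/{prefix}").network
        pool = [ipaddress.ip_address(f"{base}{octet}")
                for octet in range(first, last + 1)]
        outside = [str(ip) for ip in pool if ip not in network]
        if outside:
            raise ValueError(f"{name}: {outside} not in {network}")
        results[name] = (str(network), len(pool))
    return results


for name, (subnet, size) in check_profiles(1, 2).items():
    print(f"{name}: subnet {subnet}, {size} pool addresses")
```

For the example values, the Management pool falls in 10.1.2.0/27 and the Uplink pool in 10.1.2.32/28, which matches the prefix lengths listed in the tables.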

2.10 - Module 2 Task 10

Task 10: Create compute profiles

You will perform the instructions below from the On-premises VMware Environment

HCX Compute Profile

A compute profile contains the compute, storage, and network settings that HCX uses on this site to deploy the interconnect-dedicated virtual appliances when a service mesh is added. For more information on compute profiles and how to create them, please refer to the VMware documentation.

Step 1: Compute Profile Creation

- In your on-premises HCX installation, click Interconnect.

- Click Compute Profiles.

- Click CREATE COMPUTE PROFILE.

Step 2: Name Compute Profile

- Give your Compute Profile a Name. Suggestion: OnPrem-CP-X-Y, where X is your group number and Y is your participant number.

- Click CONTINUE.

Step 3: Select Services for Compute Profile

- Review the selected services. By default, all of the services are selected. In a real-world scenario, if a customer doesn’t need Network Extension, for example, you would unselect that service here. Leave all defaults for the purpose of this workshop.

- Click CONTINUE.

Step 4: Select Service Resources

- Click the arrow next to Select Resource(s).

- In this on-premises simulation, you only have one Cluster called OnPrem-SDDC-Datacenter-X-Y. In a real-world scenario, your customer will likely have more than one Cluster. HCX Service Resources are the resources from which you’d like HCX to migrate or protect VMs. Select the top level OnPrem-SDDC-Datacenter-X-Y.

- Click OK.

- Click CONTINUE.

Step 5: Select Deployment Resources

- Click the arrow next to Select Resource(s). Here you will be selecting the Deployment Resource, which is where the additional HCX appliances needing to be installed will be placed in the on-premises environment. Select OnPrem-SDDC-Cluster-X-Y.

- For Select Datastore click and select the LabDatastore that exists in your simulated on-premises environment. This will be the on-premises Datastore the additional HCX appliances will be placed in.

- (Optional) Click Select Folder to choose the folder in the on-premises vCenter Server where the HCX appliances will be placed. You can select vm, for example.

- Interconnect Appliance Reservation Settings: here you would set CPU/Memory Reservations for these appliances in your on-premises vCenter Server.

- Leave the default 0% value.

- Click CONTINUE.

Step 6: Select Management Network Profile

- Select the Management Network Profile you created in a previous step.

- Click CONTINUE.

Step 7: Select Uplink Network Profile

Warning

Due to the current lab setup, please select network OnPrem-management-x-y. However, in typical production scenarios it is more likely to be the Uplink Network Profile.

- Select the Management Network Profile you created in a previous step. DO NOT select the Uplink Network Profile. That profile was created to simulate what an on-premises environment might look like, but the only functional uplink network for this lab is the Management Network.

- Click CONTINUE.

Step 8: Select vMotion Network Profile

- Select the vMotion Network Profile you created in a previous step.

- Click CONTINUE.

Step 9: Select vSphere Replication Network Profile

- Select the vSphere Replication Network Profile you created in a previous step.

- Click CONTINUE.

Step 10: Select Network Containers

- Click the arrow next to Select Network Containers.

- Select the virtual distributed switch you’d like to make eligible for Network Extension.

- Click CLOSE.

- Click CONTINUE.

Step 11: Review Connection Rules

- Review the connection rules.

- Click CONTINUE.

Step 12: Finish creation of Compute Profile

Click FINISH to create the compute profile.

Your Compute Profile is created successfully.

2.11 - Module 2 Task 11

Task 11: Create a service mesh

You will perform the instructions below from the On-premises VMware Environment

HCX Service Mesh Creation

An HCX Service Mesh is the effective HCX services configuration for a source and destination site. A Service Mesh can be added to a connected Site Pair that has a valid Compute Profile created on both of the sites.

Adding a Service Mesh initiates the deployment of HCX Interconnect virtual appliances on both sites. An interconnect Service Mesh is always created at the source site.

More information can be found in VMware’s Official Documentation, Creating a Service Mesh.

Step 1: Create Service Mesh

- Click Interconnect.

- Click Service Mesh.

- Click CREATE SERVICE MESH.

Step 2: Select Sites

- Select the source site (on-premises).

- Select the destination site (AVS).

- Click CONTINUE.

Step 3: Select Compute Profiles

- Click to select Source Compute Profile which you recently created, click CLOSE.

- Click to select Remote Compute Profile from AVS side, click CLOSE.

- Click CONTINUE.

Step 4: Select Services to be Activated

Leave the Default Services and click CONTINUE.

Step 5: Advanced Configuration - Override Uplink Network Profiles

Warning

Due to the current lab setup, please select network OnPrem-management-x-y. However, in typical production scenarios it is more likely to be the Uplink Network Profile.

- Click to select the previously created Source Management Network Profile, click CLOSE. Even though you created an Uplink Network Profile, for the purpose of this lab, the management network is used for uplink.

- Click to select the Destination Uplink Network Profile (usually TNTXX-HCX-UPLINK), click CLOSE.

- Click CONTINUE.

Step 6: Advanced Configuration: Network Extension Appliance Scale Out

In Advanced Configuration – Network Extension Appliance Scale Out, keep the defaults and then click CONTINUE.

Step 7: Advanced Configuration - Traffic Engineering

In Advanced Configuration – Traffic Engineering, review, leave the defaults and click CONTINUE.

Step 8: Review Topology Preview

Review the topology preview and click CONTINUE.

Step 9: Ready to Complete

- Enter a name for your Service Mesh (SUGGESTION: HCX-OnPrem-X-Y, where X is your group number, Y your participant number).

- Click FINISH.

Note: the appliance names are derived from service mesh name (it’s the appliance prefix, essentially).

Step 10: Confirm Successful Deployment

The Service Mesh deployment will take 5-10 minutes to complete. Once successful, you will see the services as green. Click on VIEW APPLIANCES.

- You can also navigate by clicking Interconnect - Service Mesh.

- Click Appliances.

- Check for Tunnel Status = UP.

You’re ready to migrate and protect on-premises VMs to Azure VMware Solution using VMware HCX. Azure VMware Solution supports workload migrations (with or without a network extension). So you can still migrate workloads in your vSphere environment, along with On-Premises creation of networks and deployment of VMs onto those networks.

For more information, see the VMware HCX Documentation.

2.12 - Module 2 Task 12

Task 12: Network Extension

You will perform the instructions below from the On-premises VMware Environment

HCX Network Extension

You can extend networks between an HCX-activated on-premises environment and Azure VMware Solution (AVS) with HCX Network Extension.

With VMware HCX Network Extension (HCX-NE), you can extend a VM’s network to a VMware HCX remote site like AVS. VMs that are migrated to, or created on, the extended network at the remote site behave as if they exist on the same L2 network segment as VMs in the source (on-premises) environment. With Network Extension from HCX, the default gateway for an extended network is only connected at the source site. Traffic from VMs at the remote site that must be routed to a different L3 network flows through the source site gateway.

With VMware HCX Network Extension you can:

- Retain the IP and MAC addresses of the VMs and honor existing network policies.

- Extend VLAN-tagged networks from a VMware vSphere Distributed Switch.

- Extend NSX segments.

For more information please visit VMware’s documentation for Extending Networks with VMware HCX.

Once the Service Mesh appliances have been deployed, the next important step is to extend the on-premises network(s) to AVS, so that any migrated VMs will be able to retain their existing IP addresses.

Step 1: Network Extension Creation

- Click Network Extension.

- Click CREATE A NETWORK EXTENSION.

Step 2: Select Source Networks to Extend

- Select Service Mesh - Ensure you select your own Service Mesh you created in an earlier step.

- Select OnPrem-workload-X-Y network.

- Click NEXT.

- Destination First Hop Router

- If applicable, ensure your own NSX-T T1 router you created earlier is selected.

- Otherwise, select the TNT**-T1 router.

- Enter the Gateway IP Address / Prefix Length for the OnPrem-workload-X-Y network. You can find this information in the On-Premises Lab Environment section.

- Example: 10.X.1Y.129/25, where X is your group number and Y is your participant number.

- Ensure your own Extension Appliance is selected.

- Confirm your own T1 is selected under Destination First Hop Router.

- Click SUBMIT.

It might take 5-10 minutes for the Network Extension to complete.
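The Gateway IP Address / Prefix Length value entered above defines which addresses belong to the extended L2 segment. The sketch below works through the `10.X.1Y.129/25` example with Python's `ipaddress` module. It assumes that "1Y" means the digit 1 followed by the participant number, and the helper names `workload_gateway` and `leaves_segment` are hypothetical. Destinations outside the segment are the ones that, as described in the introduction to this task, are routed through the source site gateway.

```python
import ipaddress


def workload_gateway(group, participant):
    """Gateway IP / prefix length following the 10.X.1Y.129/25 pattern."""
    # Assumption: "1Y" is the digit 1 followed by the participant number.
    return ipaddress.ip_interface(f"10.{group}.1{participant}.129/25")


def leaves_segment(destination, gateway):
    """True if traffic to `destination` must be routed (L3) via the gateway."""
    return ipaddress.ip_address(destination) not in gateway.network


# Illustrative values only: group 1, participant 1.
gw = workload_gateway(1, 1)
print(gw.network)                          # 10.1.11.128/25
print(leaves_segment("10.1.11.130", gw))   # False: same L2 segment
print(leaves_segment("10.100.199.5", gw))  # True: routed via the gateway
```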

Step 4: Confirm Status of Network Extension

Confirm the status of the Network Extension as Extension complete.

2.13 - Module 2 Task 13

Task 13: Migrate a VM using HCX vMotion

You will perform the instructions below from the On-premises VMware Environment

(Optional) Confirm HCX Health

You may perform some VMware HCX appliance basic health checks using HCX Central CLI (CCLI) commands before initiating migrations. The HCX Manager Central CLI is used for diagnostic information collection and secure connections to the Service Mesh. You may refer to these articles for additional information:

Troubleshooting VMware HCX and

Getting started with the HCX CCLI.

Migrate a VM using HCX vMotion

Now that your Service Mesh has successfully deployed the additional appliances HCX will use, you can migrate VMs from your on-premises environment to AVS. In this module, you will use HCX vMotion to migrate a test VM called Workload-X-Y-1 that has been pre-created for you in your simulated on-premises environment.

Exercise 1: Migrate VM to AVS

Step 1: Examine VM to be migrated

- Click the VMs and Templates icon in your on-premises vCenter Server.

- You will find the VM named Workload-X-Y-1, select it.

- Notice the IP address assigned to the VM. It should be consistent with the network you stretched using HCX in a previous exercise.

- Notice the name of the Network this VM is connected to: OnPrem-workload-X-Y.

- (Optional) You can start a ping sequence to check the connectivity from your workstation to the VM’s IP address.

Step 2: Access HCX Interface

- From the vCenter Server interface, click Menu.

- Click HCX.

You can also access the HCX interface by using its standalone interface (outside vCenter Server interface) by opening a browser tab to: https://10.X.Y.9, where X is your group number and Y is your participant number.

Step 3: Initiate VM Migration

- From the HCX interface click Migration in the left pane.

- Click MIGRATE.

Step 4: Select VMs for Migration

- Search for the location of your VM.

- Click the checkbox to select your VM named Workload-X-Y-1.

- Click ADD.

Step 5: Transfer and Placement of VM on Destination Site

Transfer and Placement options can be entered in 2 different ways:

- If you’ve selected multiple VMs to be migrated and all VMs will be placed/migrated with the same options, setting the options in the area with the green background will set the options for all VMs.

- Options can also be set individually per VM, and they can differ from one VM to another.

- Click either GO or VALIDATE button. Clicking VALIDATE will validate that the VM can be migrated (This will not migrate the VM). Clicking GO will both validate and migrate the VM.

Use the following values for these options:

| Option | Value |
|---|---|
| Compute Container | Cluster-1 |
| Destination Folder | Discovered virtual machine |
| Storage | vsanDatastore |
| Format | Same format as source |
| Migration Profile | vMotion |
| Switchover Schedule | N/A |

Step 6: Monitor VM Migration

As you monitor the migration of your VM, keep an eye on the following areas:

- Percentage status of VM migration.

- Sequence of events as the migration occurs.

- Cancel Migration button (do not use).

Step 7: Verify Completion of VM Migration

Ensure your VM was successfully migrated. You can also check for the VM in your AVS vCenter to confirm it was migrated.

Exercise 2: Migration rollback

Step 1: Reverse Migration

VMware HCX also supports Reverse Migration, migrating from AVS back to on-premises.

Important

All migrations, including reverse migrations, must be initiated from the on-premises site.

- Click Reverse Migration checkbox.

- Select the Discovered virtual machine folder.

- Select your same virtual machine to migrate back to on-premises.

- Click ADD.

Use the following values for these options:

| Option | Value |
|---|---|
| Compute Container | OnPrem-SDDC-Cluster-X-Y |
| Destination Folder | OnPrem-SDDC-Datacenter-X-Y |
| Storage | LabDatastore |
| Format | Same format as source |
| Migration Profile | vMotion |
| Switchover Schedule | N/A |

The rest of the steps are similar to what you did on Step 5.

Step 2: Verify Completion of VM Migration

Verify that the VM is back running on the On-Premises vCenter.

2.14 - Module 2 Task 14

Task 14: Migrate a VM using HCX Replication Assisted vMotion

You will perform the instructions below from the On-premises VMware Environment

HCX Replication Assisted vMotion (RAV) uses HCX along with replication and vMotion technologies to provide large-scale, parallel migrations with zero downtime.

HCX RAV provides the following benefits:

- Large-scale live mobility: Administrators can submit large sets of VMs for a live migration.

- Switchover window: With RAV, administrators can specify a switchover window.

- Continuous replication: Once a set of VMs is selected for migration, RAV does the initial syncing, and continues to replicate the delta changes until the switchover window is reached.

- Concurrency: With RAV, multiple VMs are replicated simultaneously. When the replication phase reaches the switchover window, a delta vMotion cycle is initiated to do a quick, live switchover. Live switchover happens serially.

- Resiliency: RAV migrations are resilient to latency and varied network and service conditions during the initial sync and continuous replication sync.

- Switchover larger sets of VMs with a smaller maintenance window: Large chunks of data synchronization by way of replication allow for smaller delta vMotion cycles, paving way for large numbers of VMs switching over in a maintenance window.
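The two-phase behavior described in the list above (replication runs concurrently for all VMs, then the live switchover happens one VM at a time) can be pictured with a toy simulation. This is only a conceptual sketch of the ordering, not how HCX is implemented: `replicate` and `migrate_rav` are hypothetical stand-ins, and the VM names are examples.

```python
from concurrent.futures import ThreadPoolExecutor


def replicate(vm):
    """Stand-in for the initial sync plus continuous delta replication."""
    return f"{vm}: replicated"


def migrate_rav(vms):
    """Replicate all VMs in parallel, then switch them over serially."""
    events = []
    # Phase 1: replication runs concurrently for every selected VM.
    # Executor.map yields results in input order, so the log is stable.
    with ThreadPoolExecutor(max_workers=len(vms)) as pool:
        events.extend(pool.map(replicate, vms))
    # Phase 2: the delta vMotion switchover is performed one VM at a time.
    for vm in vms:
        events.append(f"{vm}: switched over")
    return events


for event in migrate_rav(["Workload-1-1-1", "Workload-1-1-2"]):
    print(event)
```

The point of the split is that the bulk of the data moves during the parallel phase, so each serial switchover only has a small delta left to transfer.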

HCX RAV Documentation

Migrate a VM using HCX Replication Assisted vMotion

Now that you are more comfortable with the HCX components, some steps are documented in less detail to give you the opportunity to discover new aspects of this tool by yourself.

Prerequisites

First, we need to check that the Replication Assisted vMotion Migration feature is enabled on each of the following:

- AVS HCX Manager Compute Profile

- On premises Compute Profile

- On premises Service Mesh

For example:

If not enabled on one of the previous items, you need to:

- Edit the component

- Enable the Replication Assisted vMotion Migration capability

- Continue the wizard up to the Finish button (no other change is required)

- Click on Finish button to validate.

Note: Changes to the service mesh will require a few minutes to complete. You can look at Tasks tab to monitor the progress.

Exercise 1: Migrate VMs to AVS

Step 1: Initiate VMs migration

- From the HCX interface click Migration in the left pane.

- Click MIGRATE.

Step 2: Select VMs for Migration

- Search for the location of your VM.

- Click the checkboxes to select your VMs named Workload-X-Y-1 and Workload-X-Y-2.

- Click ADD.

Step 3: Transfer and Placement of VM on Destination Site

Use the following values for these options:

| Option | Value |
|---|---|
| Compute Container | Cluster-1 |
| Destination Folder | Discovered virtual machine |
| Storage | vsanDatastore |
| Format | Same format as source |
| Migration Profile | Replication-assisted vMotion |
| Switchover Schedule | N/A |

Click either GO or VALIDATE button. Clicking VALIDATE will validate that the VM can be migrated (This will not migrate the VM). Clicking GO will both validate and migrate the VM.

Step 4: Monitor VM Migration

As you monitor the migration of your VM, keep an eye on the following areas:

- Percentage status of VM migration.

- Sequence of events as the migration occurs.

- Cancel Migration button (do not use).

Step 5: Verify completion of VM Migration

Ensure your VMs were successfully migrated. You can also check for the VMs in your AVS vCenter to confirm they were migrated.

Exercise 2: Migration rollback

Step 1: Reverse Migration with switchover scheduling

VMware HCX also supports Reverse Migration, migrating from AVS back to on-premises.

Important

All migrations, including reverse migrations, must be initiated from the on-premises site.

- Click Reverse Migration checkbox.

- Select the Discovered virtual machine folder.

- Select your same virtual machines to migrate back to on-premises.

- Click ADD.

Use the following values for these options:

| Option | Value |
|---|---|
| Compute Container | OnPrem-SDDC-Cluster-X-Y |
| Destination Folder | OnPrem-SDDC-Datacenter-X-Y |
| Storage | LabDatastore |
| Format | Same format as source |
| Migration Profile | Replication-assisted vMotion |
| Switchover Schedule | Specify a 1hr maintenance window timeframe starting at least 15 minutes from now. |

The rest of the steps are similar to what you did in Exercise 1.
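The Switchover Schedule value in the table is simple date arithmetic. As a small illustration (the function name `switchover_window` is hypothetical, and the 15-minute lead and 1-hour length are taken from the table), the start and end times can be computed like this:

```python
from datetime import datetime, timedelta


def switchover_window(now, lead=timedelta(minutes=15), length=timedelta(hours=1)):
    """Return (start, end) of a maintenance window beginning `lead` after `now`."""
    start = now + lead
    return start, start + length


# Example with a fixed timestamp; in the lab you would pass datetime.now().
start, end = switchover_window(datetime(2024, 1, 1, 10, 0))
print(start.strftime("%H:%M"), "to", end.strftime("%H:%M"))  # 10:15 to 11:15
```

Any start time later than the computed one also satisfies the "at least 15 minutes from now" requirement.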

Step 2: Monitor a scheduled VM migration

After a few minutes, Replication-assisted vMotion will start replicating the virtual disks of the virtual machines to the destination.

Once the initial replication is complete, the switchover does not happen until the maintenance window specified in the migration wizard begins. Until then, the VM keeps running on the source side and replication continues to synchronize disk changes to the target side.

When the scheduled switchover time is reached, the VM’s compute runtime, storage and network attachments switch to the destination, and the migration completes with no downtime for the VM.

Note: The switchover may not happen as soon as the maintenance window begins; it may take a few minutes to start.

After the switchover is completed, the VM should be running at the destination.

2.15 - Module 2 Task 15

Task 15: Observe the effects of extended L2 networks with and without MON

You will perform the instructions below from the On-premises VMware Environment

HCX L2 extended networks are virtual networks that span across different sites, allowing VMs to keep their IP addresses and network configuration when migrated or failed over.

HCX provides:

- HCX Network Extension: This service creates an overlay tunnel between the sites and bridges the L2 domains, enabling seamless communication and mobility of VMs.

- HCX Mobility Optimized Networking (MON): Improves network performance and reduces latency for virtual machines that have been migrated to the cloud on an extended L2 segment. MON provides these improvements by allowing more granular control of routing to and from those virtual machines in the cloud.

Observe the effects of extended L2 networks with and without MON

Prerequisites

Please migrate one of the workload VMs to the AVS side. You can use the migration method of your choice.

The VM needs to be migrated and powered-on to continue the Lab Task.

Assess the current routing path

From your workstation, run a traceroute to view the current routing path.

From a Windows command line, run: tracert IP_OF_MIGRATED_VM

Last network hop before the VM should be the On Prem routing device: 10.X.1Y.8.

Tracing route to 10.1.11.130 over a maximum of 30 hops

1 23 ms 23 ms 22 ms 10.100.199.5

2 * * * Request timed out.

3 23 ms 23 ms 23 ms 10.100.100.65

4 * * * Request timed out.

5 * * * Request timed out.

6 * * * 10.1.1.8 # <------- On Premises router

7 25 ms 24 ms 24 ms 10.1.11.130 # <------- Migrated VM
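If you want to compare routing paths without reading the output by eye, the responding hops can be extracted with a few lines of Python. This is a minimal sketch that assumes the Windows `tracert` layout shown above, with each responding hop's IPv4 address at the end of its line. The sample text reuses the output above, and the function names are hypothetical.

```python
import re

SAMPLE = """\
Tracing route to 10.1.11.130 over a maximum of 30 hops
  1    23 ms    23 ms    22 ms  10.100.199.5
  2     *        *        *     Request timed out.
  3    23 ms    23 ms    23 ms  10.100.100.65
  4     *        *        *     Request timed out.
  5     *        *        *     Request timed out.
  6     *        *        *     10.1.1.8       # <------- On Premises router
  7    25 ms    24 ms    24 ms  10.1.11.130    # <------- Migrated VM
"""

# An IPv4 address at the very end of a line (after removing annotations).
IPV4_AT_END = re.compile(r"(\d{1,3}(?:\.\d{1,3}){3})\s*$")


def responding_hops(text):
    """Return the IPs of hops that answered, in path order."""
    hops = []
    for line in text.splitlines():
        line = line.split("#", 1)[0]  # drop the "# <---" annotations
        match = IPV4_AT_END.search(line)
        if match:
            hops.append(match.group(1))
    return hops


def last_hop_before(text, destination):
    """Return the hop just before `destination`, or None if not found."""
    hops = responding_hops(text)
    if destination not in hops or hops.index(destination) == 0:
        return None
    return hops[hops.index(destination) - 1]


print(last_hop_before(SAMPLE, "10.1.11.130"))  # 10.1.1.8
```

Running the same function on a later trace makes it easy to see whether the on-premises router is still the last hop before the VM.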

Step 1: Enable Mobility Optimized Networking on existing network extension

From HCX console, select the Network Extension menu and expand the existing extended network.

Then activate the Mobility Optimized Networking button.

Accept the change by clicking Enable when prompted.

The change will take a few minutes to complete.

Step 4: Assess the current routing path after MON enablement

You can re-run a traceroute from the jump server, but the routing path should not have changed yet.

Step 5: Enable MON for the migrated VM

MON takes effect at the VM level, so it must be activated per VM (in an extended network where MON is already set up).

- From the Network Extension, and the expanded MON-enabled network, select the migrated VM.

- Select AVS side router location.

- Click on Submit.

By default, MON redirects all traffic from the migrated VM to On Prem if the destination matches an RFC1918 subnet.

- In the Lab setup, this is also why the migrated VM is unreachable at this stage if we do not customize the Policy Routes.

- In a real-world scenario, not configuring Policy Routes is a frequent cause of asymmetric traffic, because incoming and outgoing traffic for the migrated VM may not use the same path.

We will customize the Policy Routes to ensure that traffic for 10.0.0.0/8 will use the AVS side router location.

From the HCX console:

- Select the Network Extension menu, then the Advanced menu and the Policy Routes item.

- In the popup, remove the 10.0.0.0/8 network and validate the change.

- Wait a minute for the change to propagate.
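The effect of removing 10.0.0.0/8 from the Policy Routes can be illustrated with a small Python model. This is a simplified sketch of the decision described above, not HCX code: the function name `egress_side` is hypothetical, and the default list is assumed to be the three RFC 1918 private ranges.

```python
import ipaddress

# Assumed default MON policy routes: the RFC 1918 private ranges.
DEFAULT_POLICY_ROUTES = ["10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16"]

def egress_side(destination, policy_routes):
    """Return 'on-prem' if the destination matches a policy route, else 'avs'.

    Simplified model: traffic matching a policy route is sent to the
    On Prem gateway; everything else leaves through the AVS side router.
    """
    dest = ipaddress.ip_address(destination)
    for prefix in policy_routes:
        if dest in ipaddress.ip_network(prefix):
            return "on-prem"
    return "avs"

if __name__ == "__main__":
    # 10.100.199.5 is the first hop seen in the lab traceroute.
    print(egress_side("10.100.199.5", DEFAULT_POLICY_ROUTES))

    # Same destination after removing 10.0.0.0/8, as done in this step.
    customized = [p for p in DEFAULT_POLICY_ROUTES if p != "10.0.0.0/8"]
    print(egress_side("10.100.199.5", customized))
```

With the default list, replies to any 10.x.x.x address go back through On Prem; with 10.0.0.0/8 removed, they leave through the AVS side, which keeps both directions of the flow on the same path in this lab.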

Step 7: Assess the current routing path after MON enablement at VM level

You can re-run the traceroute from your workstation and analyze the result:

Tracing route to 10.1.11.130 over a maximum of 30 hops

1 23 ms 23 ms 22 ms 10.100.199.5

2 * * * Request timed out.

3 23 ms 23 ms 23 ms 10.100.100.65

4 * * * Request timed out.

5 * * * Request timed out.

6 24 ms 23 ms 23 ms 10.1.11.130 # <------- Migrated VM

The 10.X.Y.8 hop should no longer appear as the last hop, because NSX now routes the flow directly to the target AVS VM using a /32 static route that HCX sets on the NSX-T T1 gateway.
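Why a /32 route changes the path can be shown with a longest-prefix-match lookup. The sketch below is illustrative only: it assumes the lab values for group X=1, Y=1 (a /27 static route toward the On Prem router at 10.1.1.8), and the next-hop label `local-segment` is a placeholder invented for this example, not an NSX-T value.

```python
import ipaddress

def best_route(destination, routes):
    """Pick the matching route with the longest prefix, as routers do.

    routes is a list of (prefix, next_hop) tuples. Returns the chosen
    (prefix, next_hop), or None when no route matches.
    """
    dest = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(prefix), next_hop)
        for prefix, next_hop in routes
        if dest in ipaddress.ip_network(prefix)
    ]
    if not matches:
        return None
    network, next_hop = max(matches, key=lambda match: match[0].prefixlen)
    return str(network), next_hop

# Before MON is enabled for the VM: only the /27 toward On Prem exists.
routes_before = [("10.1.11.128/27", "10.1.1.8")]

# After: a /32 host route for the migrated VM is added (placeholder next hop).
routes_after = routes_before + [("10.1.11.130/32", "local-segment")]

if __name__ == "__main__":
    print(best_route("10.1.11.130", routes_before))
    print(best_route("10.1.11.130", routes_after))
```

The /32 entry is more specific than the /27, so it wins for the migrated VM only; other addresses in the subnet still follow the /27 toward On Prem.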

2.16 - Module 2 Task 16

Task 15: Achieving a migration project milestone by cutting-over network extension

You will perform the instructions below from the On-premises VMware Environment

In a migration strategy, HCX L2 extended networks can be used to facilitate the transition of workloads from one site to another without changing their IP addresses or disrupting their connectivity. This can reduce the complexity and risks associated with reconfiguring applications, firewalls, DNS, and other network-dependent components.

Once a subnet is free of other On Premises resources, it is important to consider the last phase of the migration project: the network extension cutover.

This operation provides direct AVS connectivity for the migrated workloads, relying only on NSX-T components and no longer on the combination of HCX and On Premises network components.

In a large migration project, the cutover can be done network by network, following the pace of workload migrations. When a subnet is free of On Premises resources and firewall policies are in effect on the AVS side, the cutover can proceed.

Achieving a migration project milestone by cutting-over network extension

Step 1: Migrate remaining workload to AVS

Ensure that all On Premises workloads attached to the extended network have been migrated to AVS, except the router VM and the HCX-OnPrem-X-Y-NE-I1 appliance(s).

If not, migrate the remaining VMs to AVS.

Note: You can keep or remove MON on the extended network for the current exercise. It should not affect the end result.

Step 2: Start the network extension removal process

From the HCX console, select the Network Extension menu. Select the extended network and click Unextend network.

In the wizard that follows, ensure that the Cloud Edge Gateway will be connected at the end of the removal operation, then submit.

You can proceed to the next step while the current operation is running.

Step 3: Shutdown On Premises network connectivity to the subnet

For the current lab setup, the On Premises connectivity to the migrated subnet is handled by a static route set on an NSX-T T1 gateway. We will remove this route to simulate the end of BGP route advertising from On Premises.

In a real-world scenario, the change could be shutting down a virtual interface on a router or firewall device to end BGP route advertising for the subnet.

- Connect to the NSX-T Manager console using the credentials from the AVS SDDC Azure UI.

- Click the Networking tab, then the Tier-1 Gateways section.

- Select the TNTXX-T1 gateway and open it for editing.

- In the edit pane, click the Static Routes link to edit this section (the number shown varies with the number of routes in effect).

- Remove the route associated with your lab, identified by its name and target network.

- Validate the change.

- Monitor the progress of the network extension removal in HCX.

Step 5: Network connectivity checks

When the operation has finished, check network connectivity to one of the migrated VMs on the subnet.

You can ping the VM IP address from your workstation.

Connectivity should be working.
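If you prefer to script the check, the following Python sketch wraps the operating system's `ping` command. The function names are made up for this guide, and the exit code is used as a rough success indicator only (on Windows, `ping` can return 0 for some "unreachable" replies, so read the output as well).

```python
import ipaddress
import platform
import subprocess

def ping_command(ip, count=4, system=None):
    """Build the ping command line for the given platform.

    Windows uses -n for the packet count; Linux and macOS use -c.
    """
    ipaddress.ip_address(ip)  # raises ValueError if the address is malformed
    system = system or platform.system()
    count_flag = "-n" if system == "Windows" else "-c"
    return ["ping", count_flag, str(count), ip]

def is_reachable(ip, count=4):
    """Run ping and report whether it exited successfully (approximation)."""
    result = subprocess.run(ping_command(ip, count), capture_output=True, text=True)
    return result.returncode == 0

if __name__ == "__main__":
    # 10.1.11.130 is the example VM address used earlier in this guide.
    print(ping_command("10.1.11.130"))
```

Call `is_reachable("IP_OF_MIGRATED_VM")` from the workstation once the unextend operation has finished.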

Exercise 2: Rollback if needed!

Note: The following content is only there to provide guidance in case you need or choose to roll back the network setup to its previous configuration.

Step 1: Roll back changes

Running this step is not part of the lab unless you are facing issues.

If needed, the network extension can be recreated with the same settings (especially the network prefix), and the static route recreated, to roll back the change.

To recreate the network extension, refer to the settings of Task 12.

If you need to recreate the static route on NSX-T, specify the following settings:

| Option | Value |

|---|

| Name | Nested-SDDC-Lab-X-Y |

| Network | 10.X.1Y.128/27 |

| Next hop / IP address | 10.X.Y.8 |

| Next hop / Admin Distance | 1 |

| Next hop / Scope | Leave empty |

After the route is recreated, connectivity through the On Premises routing device should be restored and your workloads on the extended segment should be reachable again. You can also roll the VMs back to On Premises if needed.
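To avoid typos when filling in the X and Y placeholders, the table values can be expanded and validated with Python's `ipaddress` module. This is a sketch under one assumption: that "1Y" means the digit 1 followed by Y, as in the 10.1.11.130 example address used earlier. The function name is hypothetical.

```python
import ipaddress

def static_route_settings(x, y):
    """Expand the X/Y placeholders from the table into concrete values.

    ipaddress raises ValueError if the result is not a valid network
    or address, which catches most typing mistakes.
    """
    network = ipaddress.ip_network(f"10.{x}.1{y}.128/27")
    next_hop = ipaddress.ip_address(f"10.{x}.{y}.8")
    return {
        "Name": f"Nested-SDDC-Lab-{x}-{y}",
        "Network": str(network),
        "Next hop / IP address": str(next_hop),
        "Next hop / Admin Distance": 1,
    }

if __name__ == "__main__":
    for option, value in static_route_settings(1, 1).items():
        print(f"{option}: {value}")
```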

3 - Module 3 - VMware Site Recovery Manager (SRM)

Module 3: Setup SRM for Disaster Recovery to AVS

Site Recovery Manager

VMware Site Recovery Manager (SRM) for Azure VMware Solution (AVS) is an add-on that customers can purchase to protect their virtual machines in the event of a disaster. SRM for AVS allows customers to automate and orchestrate the failover and failback of VMs between an on-premises environment and AVS, or between two AVS sites.

For more information on VMware Site Recovery Manager (SRM), visit VMware’s official documentation for Site Recovery Manager.

This module walks through the implementation of a disaster recovery solution for Azure VMware Solution (AVS), based on VMware Site Recovery Manager (SRM).

Click here if you’d like to see a 10-minute demo of SRM on AVS.

What you will learn

In this module, you will learn how to:

- Install Site Recovery Manager in an AVS Private Cloud.

- Create a site pairing between two AVS Private Clouds in different Azure regions.

- Configure replications for AVS Virtual Machines.

- Configure SRM protection groups and recovery plans.

- Test and execute recovery plans.

- Re-protect recovered Virtual Machines and execute fail back.

Prerequisite knowledge

- AVS Private Cloud administration (Azure Portal).

- AVS network architecture, including connectivity across private clouds in different regions based on Azure ExpressRoute Global Reach.

- Familiarity with disaster recovery (DR) concepts such as Recovery Point Objective (RPO) and Recovery Time Objective (RTO).

- Basic concepts of Site Recovery Manager and vSphere Replication.

Module scenario

In this module, two AVS Private Clouds are used. VMware Site Recovery Manager will be configured at both sites to replicate VMs in the protected site to the recovery site.

Group X is your originally assigned group, and Group Z is the group whose SDDC you will use as the Recovery site. For example, Group 1 will use Group 2’s SDDC as its Recovery site.

For example:

| Private Cloud Name | Location | Role |

|---|

| GPSUS-PARTNERX-SDDC | Brazil South | Protected Site |

| GPSUS-PARTNERZ-SDDC | Brazil South | Recovery Site |

The two private clouds should already have been interconnected in Module 1, using ExpressRoute Global Reach or AVS Interconnect. The diagram below depicts the topology of the lab environment.

Recovery Types with SRM

VMware Site Recovery Manager (SRM) is a business continuity and disaster recovery solution that helps you plan, test, and run the recovery of virtual machines between a protected vCenter Server site and a recovery vCenter Server site. You can use Site Recovery Manager to implement different types of recovery from the protected site to the recovery site:

Planned Migration

- Planned migration: The orderly evacuation of virtual machines from the protected site to the recovery site. Planned migration prevents data loss when migrating workloads in an orderly fashion. For planned migration to succeed, both sites must be running and fully functioning.

Disaster Recovery

- Disaster recovery: Similar to planned migration except that disaster recovery does not require that both sites be up and running, for example if the protected site goes offline unexpectedly. During a disaster recovery operation, failure of operations on the protected site is reported but is otherwise ignored.

Site Recovery Manager orchestrates the recovery process with VM replication between the protected and the recovery site, to minimize data loss and system down time. At the protected site, Site Recovery Manager shuts down virtual machines cleanly and synchronizes storage, if the protected site is still running. Site Recovery Manager powers on the replicated virtual machines at the recovery site according to a recovery plan. A recovery plan specifies the order in which virtual machines start up on the recovery site. A recovery plan specifies network parameters, such as IP addresses, and can contain user-specified scripts that Site Recovery Manager can run to perform custom recovery actions on virtual machines.
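The role of a recovery plan can be pictured with a small data model. The Python sketch below is purely conceptual and does not use any SRM API: the class names, the `priority` field (lower number starts first), and the sample VM names and addresses are all invented for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class ProtectedVM:
    name: str
    priority: int          # illustrative: lower number powers on first
    recovery_ip: str = ""  # optional IP address to apply at the recovery site

@dataclass
class RecoveryPlan:
    name: str
    vms: list = field(default_factory=list)

    def power_on_order(self):
        """Return VM names in start-up order (stable for equal priorities)."""
        return [vm.name for vm in sorted(self.vms, key=lambda vm: vm.priority)]

# Hypothetical three-tier application: database first, web tier last.
plan = RecoveryPlan(
    name="demo-plan",
    vms=[
        ProtectedVM("web-01", priority=3, recovery_ip="10.2.11.30"),
        ProtectedVM("db-01", priority=1, recovery_ip="10.2.11.10"),
        ProtectedVM("app-01", priority=2, recovery_ip="10.2.11.20"),
    ],
)

if __name__ == "__main__":
    print(plan.power_on_order())
```

In SRM itself, the start-up order and per-VM network settings are configured in the recovery plan through the Site Recovery user interface, as covered later in this module.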

Site Recovery Manager lets you test recovery plans. You conduct tests by using a temporary copy of the replicated data in a way that does not disrupt ongoing operations at either site.

Site Recovery Manager supports both hybrid (protected site on-prem, recovery site on AVS) and cloud-to-cloud scenarios (protected and recovery sites on AVS, in different Azure regions). This lab covers the cloud-to-cloud scenario only.

Site Recovery Manager is installed by deploying the Site Recovery Manager Virtual Appliance on an ESXi host in a vSphere environment. The Site Recovery Manager Virtual Appliance is a preconfigured virtual machine that is optimized for running Site Recovery Manager and its associated services. After you deploy and configure Site Recovery Manager instances on both sites, the Site Recovery Manager plug-in appears in the vSphere Web Client or the vSphere Client. The figure below shows the high-level architecture for an SRM site pair.

vSphere Replication

SRM can work with multiple replication technologies: array-based replication, vSphere (host-based) replication, vVols replication, and a combination of array-based and vSphere replication (learn more).